"I'm a bit uneasy sending my private conversations to an AI server...", "It's useless when the internet is down!", "The monthly subscription fee is a burden..." Have you ever had these thoughts? Behind the convenience of cloud AI, these concerns definitely exist. But what if you could run your own AI, on your own computer, for free, without any of these worries? KT's Korean-specialized LLM 'midm' and a magical tool called 'LM Studio' have teamed up to make this dream a reality. Grab a cup of coffee and join us on a journey to transform your PC into a state-of-the-art AI server! 🚀

1. Why is Local AI Suddenly a Big Deal? (feat. Privacy & Free) 🕵️‍♂️

The AI we commonly use, like ChatGPT or Claude, runs on countless computers in massive data centers. When we send a question, it travels over the internet to the server, and we receive the answer back. 'Local AI' brings this entire process inside the computer on your desk. Since your data never leaves your machine, concerns about privacy or leaked corporate secrets largely disappear. Once the model is downloaded, you don't need an internet connection, and there are no monthly subscription fees. You get complete ownership and control: it's truly 'yours'.

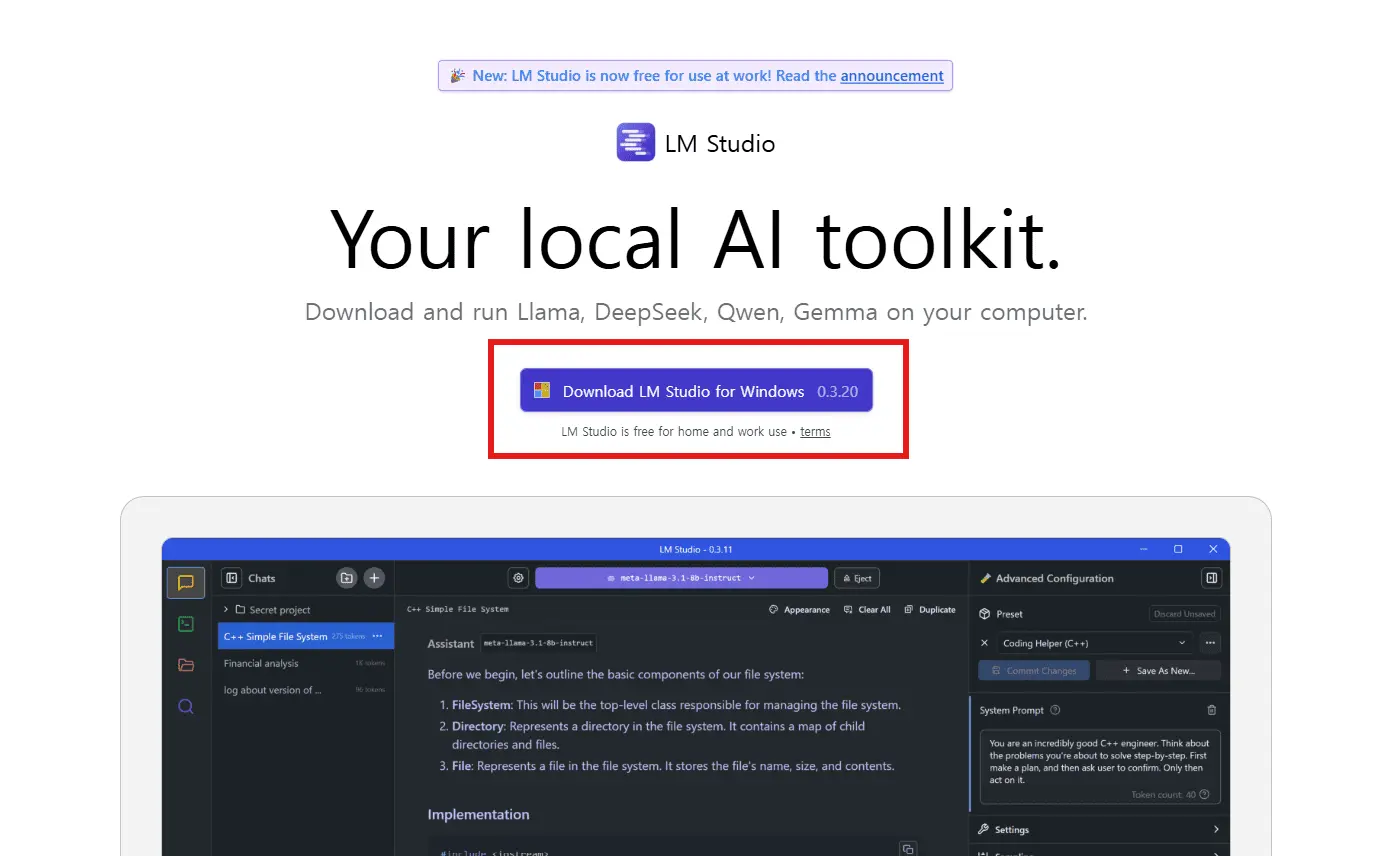

2. An AI Playground for Beginners: Installing LM Studio 🏟️

Running a local AI used to require complex setups, but everything changed with the arrival of LM Studio. It's a revolutionary program that lets you install and run LLMs as easily as downloading an app from an app store, with no coding knowledge required.

Installation is simple. Go to the official LM Studio website, download the file for your operating system, and run it. That's it! A minimum of 16GB of system memory (RAM) is recommended, but with the 'quantized' models we'll discuss today, you can enjoy it even with 8GB of RAM.

3. Moving the Korean AI 'midm' into Your PC (Step-by-Step) 🏠

Now that LM Studio is installed, it's time to invite today's star, 'midm'.

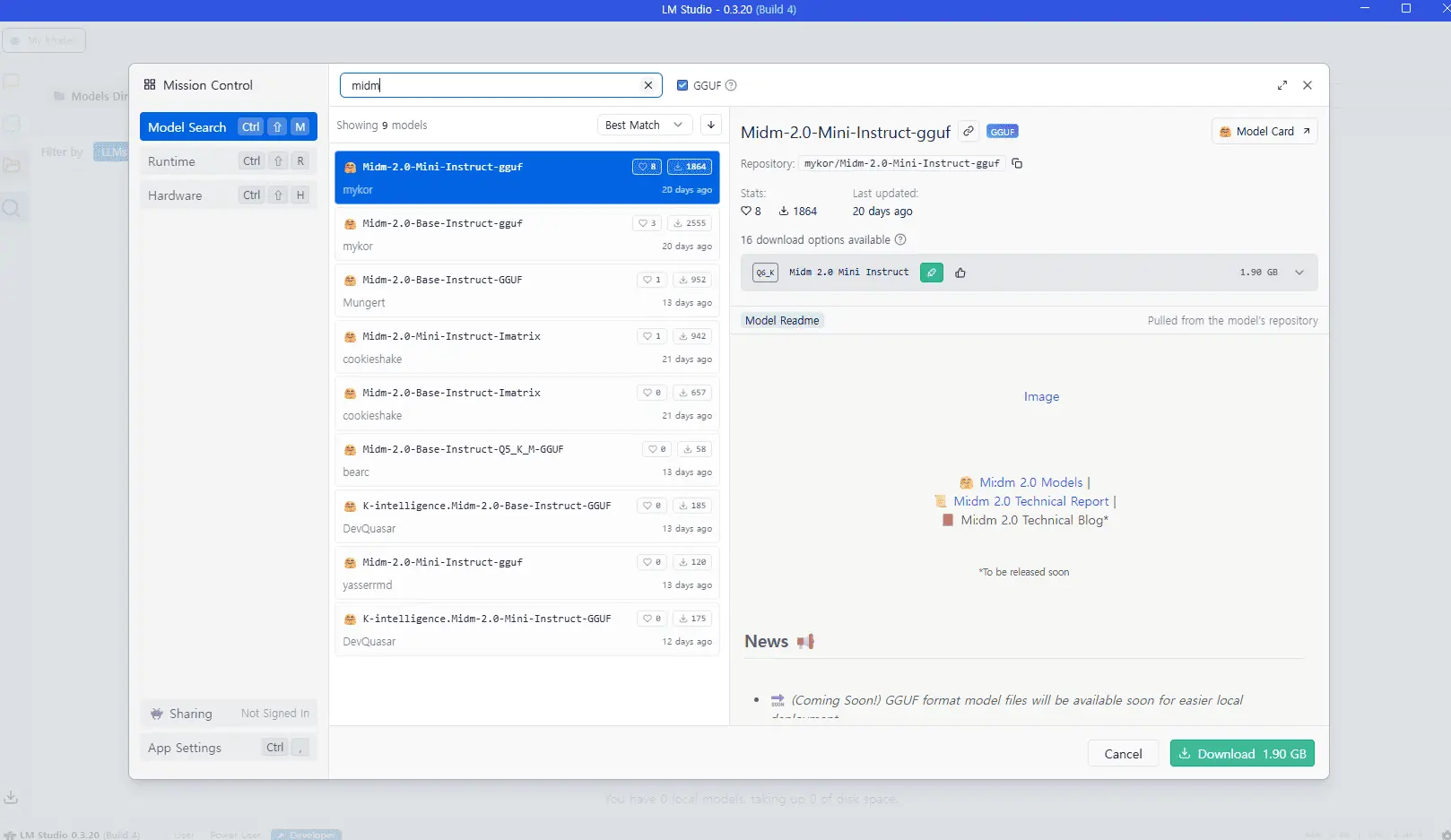

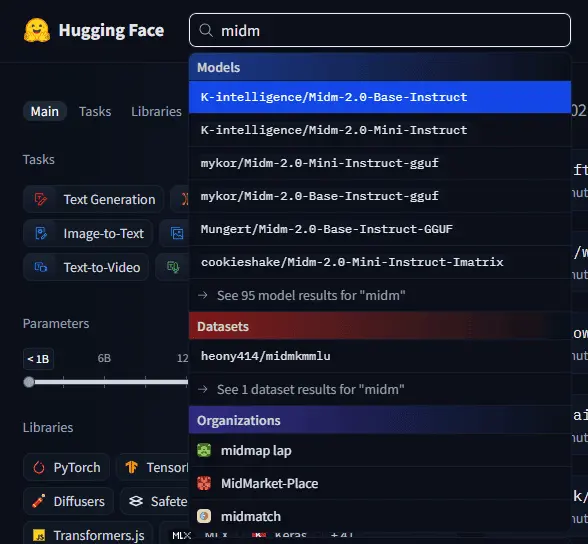

- Search for the model: Launch LM Studio and click the home icon (search) on the left menu. In the search bar, type K-intelligence/Midm. The models officially shared by KT will appear.

- Select the model: Look for a model with GGUF in its name, like Midm-2.0-Base-Instruct-GGUF. GGUF is a versatile file format optimized for local PC environments.

- Choose a version and download: When you click the model, a list of files will appear on the right. You'll see cryptic names like F16, Q8_0, Q4_K_M, etc. (Don't worry, we'll explain them all in the next section!) For now, click the 'Download' button next to the Q4_K_M or Q5_K_M version. It's the ideal starting point for balancing size and performance.

- Prepare for chat: Once the download is complete, click the speech bubble icon (chat) on the left menu. Click the 'Select a model to load' button at the top of the screen and choose the midm model you just downloaded. You're all set!

What is GGUF Quantization?

Simply put, it's 'AI dieting'. It's a technology that dramatically reduces file size and memory usage while minimizing performance degradation. Thanks to this, we can run large language models on personal PCs without needing high-spec servers.
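To make the 'dieting' idea concrete, here is a minimal sketch of quantization in plain Python. It is a deliberately simplified illustration (one shared scale per block of weights, rounded to 4-bit integers) and not the actual scheme used inside GGUF files, which is more elaborate. The function names and sample weights are made up for this example.

```python
def quantize_block(values, bits=4):
    """Map floats to small signed integers that share one scale factor."""
    qmax = 2 ** (bits - 1) - 1                  # 7 for 4-bit values
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs else 1.0  # avoid dividing by zero
    quantized = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return scale, quantized


def dequantize_block(scale, quantized):
    """Recover approximate floats from the stored integers."""
    return [scale * q for q in quantized]


# Hypothetical weights, chosen only for illustration.
weights = [0.7, -0.35, 0.1, 0.0]
scale, quantized = quantize_block(weights)
restored = dequantize_block(scale, quantized)

print("stored integers:", quantized)
print("restored values:", restored)
```

Each weight now needs only 4 bits plus a share of the block's scale, and the restored values differ from the originals by at most half a quantization step. That small rounding error is the 'performance degradation' the diet costs.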

4. "Q2? Q8? F16?" Decoding the Mysterious Model Files! 🔬

You were probably confused by the cryptic file names in the download list. These names indicate the 'Quantization' level. Once you understand them, you'll be able to pick the perfect model for your PC's specifications.

| Version Name | Description | Recommended For |
|---|---|---|
| F32 / F16 | Unquantized original (F32) or half-precision (F16) version. Offers the best quality but has a very large file size and demands huge amounts of RAM. | High-performance workstations, professionals. |
| Q8_0 | 8-bit quantization. Shows almost no quality difference from F16 but is much smaller. An excellent choice when you don't want to sacrifice quality. | Users with 32GB+ RAM, quality-focused. |
| Q6_K | 6-bit quantization. An excellent balance between quality and performance. | Users with 16GB+ RAM, balance-focused. |
| Q5_K_M | 5-bit quantization. Many experts consider this the 'king of value'. It delivers outstanding performance for a moderate size. | Users with 16GB RAM, the optimal choice. |
| Q4_K_M | 4-bit quantization. The most popular version, runs smoothly even on standard laptops (16GB RAM). Highly recommended for beginners. | Users with 8GB-16GB RAM, beginners. |
| Q3_K_S / Q2_K | 3-bit/2-bit quantization. The file size is very small, but the performance degradation can be noticeable. You might feel 'Hey, this isn't very smart'. | Older PCs with very limited RAM, experimental use. |

Glossary: 'K' marks the newer "k-quant" family of quantization schemes from llama.cpp, which generally preserve more quality at a given size than the older formats. 'S', 'M', and 'L' stand for Small, Medium, and Large, indicating minor size and quality differences within the same bit level. 'M' is generally the safest bet.
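To get a feel for why the bit level matters, here is a rough back-of-the-envelope sketch. It assumes a hypothetical 10-billion-parameter model and uses the nominal bit width from each file name. Real GGUF files are somewhat larger because they also store scale factors and keep some tensors at higher precision, so treat the numbers as lower bounds rather than exact sizes.

```python
# Nominal bits per weight, read straight off the version names in the table.
NOMINAL_BITS = {
    "F16": 16,
    "Q8_0": 8,
    "Q6_K": 6,
    "Q5_K_M": 5,
    "Q4_K_M": 4,
    "Q3_K_S": 3,
    "Q2_K": 2,
}

# Hypothetical parameter count, used only for illustration.
ASSUMED_PARAMETERS = 10e9


def estimate_size_gb(num_parameters, bits_per_weight):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_parameters * bits_per_weight / 8 / 1e9


for name, bits in NOMINAL_BITS.items():
    size = estimate_size_gb(ASSUMED_PARAMETERS, bits)
    print(f"{name:8s} ~{size:.1f} GB")
```

The model also needs working memory for the conversation context on top of the weights, so leave some headroom when comparing these estimates with your installed RAM.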

5. Hands-On: In-depth Local Testing of 'midm' (feat. Q4_K_M) 🕵️‍♀️

Alright, let's really see how smart 'midm' is. I'll be conducting a full test on my M2 MacBook Air (16GB RAM) using the Q4_K_M model.

5.1. Testing Korean Nuance: Homegrown is Best!

First, I checked its ability to grasp nuance, the pride of a native Korean model. I presented a common workplace scenario.

# Prompt

"Please draft an email to our client. The content is that we've made a final decision to go with design draft B out of A, B, and C. However, please include a nuance that respects the opinion of Manager Kim from the other team, who strongly pushed for draft A, so he doesn't feel bad."

# Midm AI's Response (Summary)

Subject: Regarding the Final Selection of the Design Draft

(Introduction)... All the drafts you sent showed deep consideration and excellent sense. In particular, the creative approach of draft A gave us a lot of inspiration. Thanks to Manager Kim's discerning eye, the quality of our project was elevated.

After a long internal discussion, we have decided to proceed with draft B, which aligns best with our project's direction. ... (Conclusion)

Wow, I'm truly impressed. 👏 It doesn't just convey the facts; it uses the rhetoric of a seasoned professional, praising draft A to be considerate of the other person's feelings. This kind of subtle emotional intelligence is an area where foreign models often struggle.

5.2. Creativity and Coding Test: Potential and Limitations

Next, I checked its creative and technical abilities. I requested a simple Python script.

# Prompt

"Create a Python code that unzips all .zip files in my Downloads folder and then deletes the original zip files."

# Midm AI's Response

    import os
    import zipfile

    download_folder = 'C:/Users/YourUser/Downloads' # Please change this to your actual path

    for filename in os.listdir(download_folder):
        if filename.endswith('.zip'):
            zip_path = os.path.join(download_folder, filename)
            try:
                with zipfile.ZipFile(zip_path, 'r') as zip_ref:
                    # Specify the folder to extract to (e.g., a folder with the same name as the zip file)
                    extract_path = os.path.join(download_folder, filename[:-4])
                    os.makedirs(extract_path, exist_ok=True)
                    zip_ref.extractall(extract_path)
                    print(f"Finished unzipping '{filename}'.")
                os.remove(zip_path) # Delete the original zip file
                print(f"Deleted original file '{filename}'.")
            except zipfile.BadZipFile:
                print(f"'{filename}' is not a valid zip file.")
            except Exception as e:
                print(f"An error occurred while processing '{filename}': {e}")

It produced pretty decent code. I was impressed by its attention to details like error handling. However, it did struggle with more complex algorithms or code involving the latest libraries. Its creative writing ability was decent but tended to use predictable, cliché expressions at times.
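One detail worth noting: the generated script hardcodes a Windows-style path, even though the test ran on a MacBook. As a possible refinement (my own sketch, not something 'midm' produced), the same idea can be written with pathlib so it works on any operating system. The function name is made up for this example, and the Downloads location is assumed to sit directly under the home folder.

```python
import zipfile
from pathlib import Path


def unzip_and_delete(folder):
    """Extract every .zip in `folder` into a same-named subfolder, then delete the archive."""
    extracted = []
    for zip_path in sorted(folder.glob("*.zip")):
        target = zip_path.with_suffix("")  # e.g. photos.zip -> photos
        try:
            with zipfile.ZipFile(zip_path) as archive:
                target.mkdir(exist_ok=True)
                archive.extractall(target)
        except zipfile.BadZipFile:
            print(f"'{zip_path.name}' is not a valid zip file, skipping.")
            continue
        # The archive is closed at this point, so deleting it is safe on every platform.
        zip_path.unlink()
        extracted.append(target)
    return extracted


# Example call (assumes Downloads sits directly under your home folder):
# unzip_and_delete(Path.home() / "Downloads")
```

Because the script deletes files, it is worth trying it on a test folder first.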

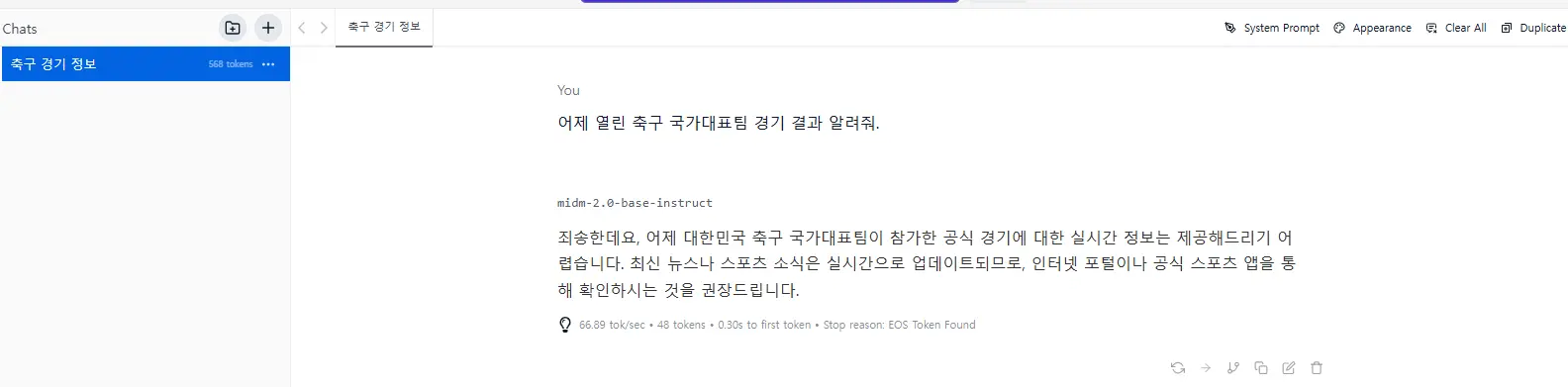

5.3. Limit Testing: Recent Information and Complex Reasoning

Finally, I checked the clear limitations of a local model.

"Tell me the results of the national soccer team's match that was held yesterday."

The result? Of course, it doesn't know. 'Midm' was trained on data up to 2023 and is not connected to the internet. This should be understood not as a disadvantage of local models, but as a characteristic of using built-in knowledge rather than real-time information.

6. Bonus: Tweaking LM Studio Settings (Creating Your Own AI) 🎨

The real fun of LM Studio lies in its 'settings'. You can change the AI's personality by adjusting a few values in the right-hand panel of the chat window.

- System Prompt: This is an instruction that defines the AI's basic role. For example, if you input, "You are an AI assistant who speaks like a king from the Joseon Dynasty," the AI's tone will become solemn. If you say, "You must answer everything in English," it instantly becomes an English teacher.

- Temperature (Creativity Level): The higher the value (e.g., 1.2), the more creative and unpredictable the AI's answers become. The lower it is (e.g., 0.5), the more deterministic and consistent the answers. Try raising it when writing poetry and lowering it when summarizing a report.
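To see why temperature has this effect, here is a minimal sketch of temperature scaling, the standard technique of dividing the model's raw scores by the temperature before turning them into probabilities. The three scores are invented for illustration, and the sketch assumes LM Studio applies temperature in this usual way.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    highest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next words.
logits = [2.0, 1.0, 0.5]

low = softmax_with_temperature(logits, 0.5)
high = softmax_with_temperature(logits, 1.2)

print("temperature 0.5:", [round(p, 2) for p in low])
print("temperature 1.2:", [round(p, 2) for p in high])
```

At the lower temperature the top candidate takes most of the probability, so the model picks it almost every time. At the higher temperature the probabilities are closer together, so less likely words get chosen more often.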

Playing around with these settings to create your own AI persona is one of the best ways to enjoy local AI.
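If you'd rather drive these settings from a script than from the chat window, the sketch below shows one possible approach. It assumes you have started LM Studio's local server feature (not covered in this post) and that it is listening on its usual default address with an OpenAI-style chat endpoint. The model name is a placeholder, so replace it with whatever identifier LM Studio shows for your loaded model.

```python
import json
import urllib.request

# Assumptions: default local server address and a placeholder model name.
LMSTUDIO_URL = "http://localhost:1234/v1/chat/completions"
MODEL_NAME = "midm-2.0-base-instruct"  # hypothetical identifier


def build_chat_request(system_prompt, user_message, temperature=0.7):
    """Bundle the system prompt, user message, and temperature into one payload."""
    return {
        "model": MODEL_NAME,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
        "temperature": temperature,
    }


def send_chat_request(payload, url=LMSTUDIO_URL):
    """POST the payload to the local server and return the reply text."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["choices"][0]["message"]["content"]


payload = build_chat_request(
    "You are an AI assistant who speaks like a king from the Joseon Dynasty.",
    "Summarize today's schedule.",
    temperature=1.2,
)
print(json.dumps(payload, ensure_ascii=False, indent=2))
# With the server running, the reply could be fetched with:
# print(send_chat_request(payload))
```

The payload carries the same two knobs described above, so you can save a favorite persona as a small script and reuse it.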

Conclusion: Hurray for AI Independence! What's Next? 🥳

Congratulations! You have now achieved 'AI independence', free from cloud dependency. With KT's 'midm' and LM Studio, your PC has been reborn as a powerful productivity tool and an AI laboratory for exploring limitless possibilities.

Based on what you've learned today, test various models, quantization versions, and LM Studio settings to find the combination that works best for you. This is the greatest joy of local AI. Now, your creativity and imagination are the only limits. Enjoy your AI journey! 😉