The AI world has been flipped upside down again. Moonshot AI's KIMI AI, especially its latest Kimi K2 Thinking model, has claimed state-of-the-art (SOTA) results on several benchmarks, outpacing GPT-5 and Claude Sonnet 4.5. This 1-trillion-parameter open-source agent model handles a 256K-token context and up to 300 sequential tool calls in a single task. "Can I really use this for free?" is the question flooding in. Let's dive into why.

What is KIMI AI? Moonshot AI's Ambition

KIMI AI is a series of large language models (LLMs) developed by the Chinese startup Moonshot AI. Since its 2023 debut it has been known for long-context handling: the first release accepted about 200,000 Chinese characters of input, and a 2024 update raised that to 2 million characters, making it a strong choice for document summarization and analysis.

The latest model, Kimi K2 Thinking, uses a mixture-of-experts (MoE) architecture with 1T total parameters, of which 32B are active for each token. Its weights are open-sourced on Hugging Face, so you can run it locally. Backed by Alibaba, Moonshot aims to "make everyone superhuman."
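What "32B active" means in practice: a router sends each token through only a few experts, so per-token compute scales with the active parameters, while memory still has to hold all 1T. A quick sketch of that share using the figures above (the function name is illustrative):

```bash
#!/usr/bin/env bash
# Share of an MoE model's parameters that a single token passes through.
active_share_pct() {
  # $1 = active parameters, $2 = total parameters; prints a percentage
  awk -v a="$1" -v t="$2" 'BEGIN { printf "%.1f\n", 100 * a / t }'
}

# Kimi K2 Thinking: 32B active out of 1T total
active_share_pct 32000000000 1000000000000   # prints 3.2
```

Each token touches only about 3% of the network, so its compute per token is closer to a 32B dense model than to a 1T one.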

Why KIMI now? OpenAI's and Anthropic's closed models are expensive and usage-limited, while KIMI offers a free web chat and a cheap API. K2 Thinking also posted top scores at release on Humanity's Last Exam (HLE, 44.9% with tools) and BrowseComp (60.2%).

"Kimi K2 Thinking beats Claude and GPT-5 in coding and reasoning. Hard to believe it's open-source." – Reddit r/LocalLLaMA user

Core Features of Kimi K2 Thinking

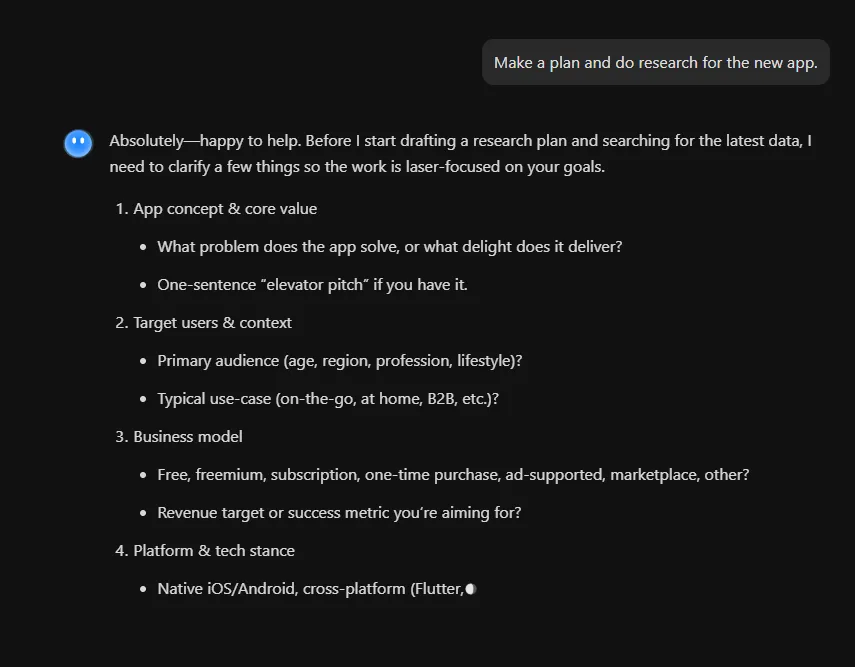

KIMI's real strength is working as a 'thinking agent.' It interleaves step-by-step reasoning with tool calls and can sustain 200-300 consecutive calls on a single task. Instead of producing one response and stopping, it plans, executes, and verifies.
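Over an OpenAI-compatible chat API, that agent loop looks like this: you advertise tools, the model replies with `tool_calls` instead of a final answer, you run the tools and send results back as `role: "tool"` messages, and you repeat until it answers. Below is a sketch of the loop's first request only, assuming Moonshot's endpoint accepts the OpenAI `tools` schema. The `get_weather` tool, the `MOONSHOT_API_KEY` variable, and the function names are hypothetical.

```bash
#!/usr/bin/env bash
# First request of a tool-calling loop (sketch). Assumes the endpoint accepts the
# OpenAI "tools" schema; get_weather is a hypothetical tool for illustration.
build_tool_request() {
  cat <<'JSON'
{
  "model": "kimi-k2-thinking",
  "messages": [{"role": "user", "content": "Should I bring an umbrella in Beijing today?"}],
  "tools": [{
    "type": "function",
    "function": {
      "name": "get_weather",
      "description": "Hypothetical tool: current weather for a city",
      "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"]
      }
    }
  }]
}
JSON
}

# Sends the request body on stdin (-d @-). MOONSHOT_API_KEY is an assumed variable name.
# If the reply's choices[0].message.tool_calls is set, run each call, append that
# assistant message plus one {"role": "tool", "tool_call_id": ..., "content": ...}
# message per call to "messages", and send the conversation again.
send_tool_request() {
  build_tool_request | curl -s https://api.moonshot.cn/v1/chat/completions \
    -H "Authorization: Bearer ${MOONSHOT_API_KEY:?set MOONSHOT_API_KEY}" \
    -H "Content-Type: application/json" \
    -d @-
}
```

Call `send_tool_request` with the key exported; a long agent run is simply this request/response cycle repeated, with the message history growing each turn.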

- Ultra-Long Context (256K tokens): Analyzes a full novel or a document of several hundred pages in one pass, with 90%+ summary accuracy.

- Agentic Tool Use: Works natively with browser, filesystem, and terminal tools, so a request like "Build a website" can go from code all the way to deployment.

- Coding/Math: 71.3% on SWE-Bench Verified and strong LiveCodeBench scores; handles bug fixes and algorithm optimization.

- Multimodal: Image and video understanding comes from the separate Kimi-VL model (128K context); K2 Thinking itself is text-only.
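To check whether a document fits the 256K window before uploading it, a rough character-based estimate is enough. This sketch assumes about 4 characters per token, a common rule of thumb for English; Kimi's own tokenizer will count differently (Chinese text in particular), so treat the result as an estimate. The function names are illustrative.

```bash
#!/usr/bin/env bash
# Rough context-fit check. Assumption: ~4 characters per English token.
CONTEXT_TOKENS=256000   # "256K", taken as 256,000 to stay conservative

estimate_tokens() {
  # $1 = character count; prints ceil(chars / 4)
  echo $(( ($1 + 3) / 4 ))
}

fits_in_context() {
  # $1 = path to a text file; wc -m counts characters
  local chars tokens
  chars=$(wc -m < "$1")
  tokens=$(estimate_tokens "$chars")
  if [ "$tokens" -le "$CONTEXT_TOKENS" ]; then
    echo "fits (~$tokens tokens)"
  else
    echo "too long (~$tokens tokens)"
  fi
}
```

By this estimate a 1,000,000-character file comes to about 250,000 tokens, right at the edge of the window; `fits_in_context report.txt` prints either `fits` or `too long` with the estimated count.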

Key Takeaway

Kimi K2 Thinking uses test-time scaling: on complex tasks it spends more thinking tokens and more tool-call turns. That makes its performance per dollar hard to beat.

It shines in OK Computer, Kimi's agent mode: ask it to "make a dashboard" and it turns your data into a full interactive UI.

Web, API, Local Run: Hands-On Guide

1. Web Chatbot (Free): Visit kimi.moonshot.cn, upload a file (50+ formats are supported), and ask it to "summarize." Daily limits are generous.

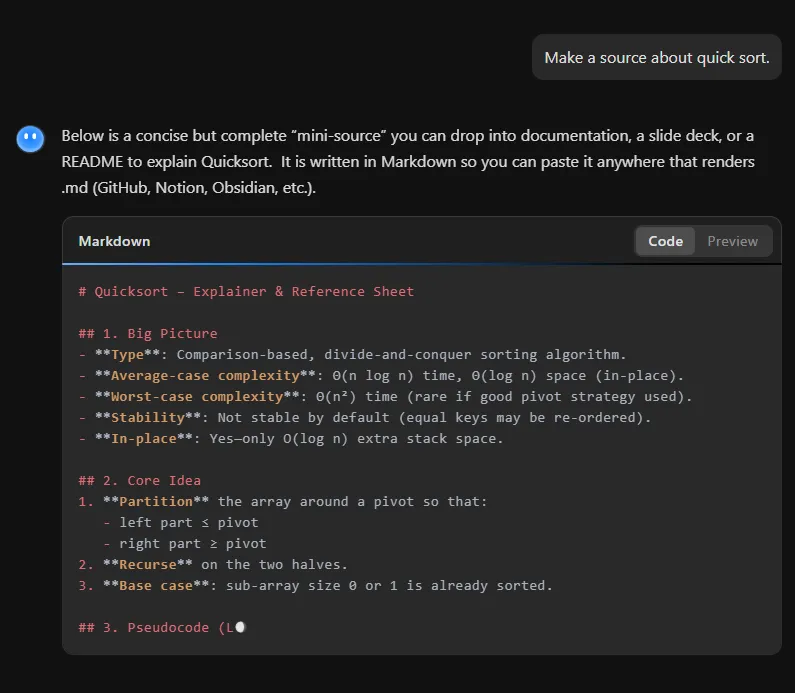

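2. API: Get a key at platform.moonshot.cn. Moonshot lists $0.60/M input tokens ($0.15/M on cache hits) and $2.50/M output tokens, roughly a fifth to a sixth of Claude Sonnet 4.5's $3/$15 list price. The API is OpenAI-compatible, so OpenAI client code works after switching the base URL and model name.

```bash
# Quick start
curl -X POST https://api.moonshot.cn/v1/chat/completions \
  -H "Authorization: Bearer YOUR_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "kimi-k2-thinking",
    "messages": [{"role": "user", "content": "Implement quicksort in Python"}]
  }'
```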
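Per-million-token prices turn into per-request costs with simple arithmetic. This sketch assumes a hypothetical workload of 20K input tokens and 5K output tokens, and assumes thinking tokens are billed as output, as is common for reasoning models. Prices are arguments, so you can plug in current rates; the values below are Moonshot's listed $0.60/M uncached and $0.15/M cache-hit input with $2.50/M output, and Claude Sonnet 4.5's $3/M input and $15/M output.

```bash
#!/usr/bin/env bash
# Per-request API cost in USD from token counts and $/1M-token prices.
request_cost() {
  # $1 = input tokens, $2 = output tokens (incl. thinking), $3 = input $/M, $4 = output $/M
  awk -v i="$1" -v o="$2" -v pi="$3" -v po="$4" \
    'BEGIN { printf "%.4f\n", (i * pi + o * po) / 1e6 }'
}

# Hypothetical workload: 20K-token prompt, 5K tokens of thinking + answer
request_cost 20000 5000 0.60 2.50   # Kimi K2 Thinking, uncached input: 0.0245
request_cost 20000 5000 0.15 2.50   # Kimi K2 Thinking, cache-hit input: 0.0155
request_cost 20000 5000 3 15        # Claude Sonnet 4.5: 0.1350
```

For this mix Kimi costs about 18% (uncached) to 11.5% (cache hits) of the Claude request; output-heavy agent runs pull the ratio toward the 6x gap in output prices.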

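3. Local Run (Open-Source): Download the weights from Hugging Face and run them with llama.cpp, for example using Unsloth's GGUF quantizations. The released weights already use INT4 quantization, but 1T parameters at 4 bits is still on the order of 500 GB, so a single RTX 4090 (24 GB VRAM) only works with most of the model offloaded to system RAM, and generation will be slow.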

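```bash
# Hugging Face download (needs git-lfs; the weights are hundreds of GB)
git lfs install
git clone https://huggingface.co/moonshotai/Kimi-K2-Thinking

# llama.cpp inference: llama-cli needs a GGUF file, while the official repo ships
# safetensors; Kimi-K2-Thinking-Q4.gguf is a placeholder for a GGUF quantization
./llama-cli --model Kimi-K2-Thinking-Q4.gguf -p "Hello, Kimi!"
```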
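Why the hardware bar is so high: weight storage is parameters × bits per weight / 8 bytes. This back-of-the-envelope sketch ignores KV cache, activations, and runtime overhead, so real requirements are higher; the function name is illustrative.

```bash
#!/usr/bin/env bash
# Weight storage only, in GB (1e9 bytes): parameters x bits per weight / 8.
# KV cache, activations and runtime overhead are NOT included.
weight_gb() {
  # $1 = parameter count, $2 = bits per weight
  awk -v p="$1" -v b="$2" 'BEGIN { printf "%.0f\n", p * b / 8 / 1e9 }'
}

weight_gb 1000000000000 4   # all 1T params at INT4: 500
weight_gb 1000000000000 2   # even at 2 bits: 250
weight_gb 32000000000 4     # the 32B active per token at INT4: 16
```

Both full-model figures are far beyond an RTX 4090's 24 GB. The 16 GB figure explains why offloading works at all: each token reads only the active slice, but the active experts change from token to token, so the whole model must still sit in system RAM.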

GPT, Claude, Gemini Comparison Table

Benchmark scores and list prices compared. Kimi K2 Thinking posts the highest HLE score here and costs far less, while GPT-5 and Claude Sonnet 4.5 still lead on SWE-Bench Verified.

| Model | Context | HLE (%) | SWE-Bench Verified (%) | Input/Output Price ($/M tokens) | Open Source |
|---|---|---|---|---|---|
| Kimi K2 Thinking | 256K | 44.9 | 71.3 | 0.60 / 2.50 (0.15 cached input) | Yes |
| GPT-5 | 400K | ~40 | 74.9 | 1.25 / 10 | No |
| Claude Sonnet 4.5 | 200K | 42 | 77.2 | 3 / 15 | No |
| Gemini 2.5 Pro | 1M | 38 | 62 | 1.25 / 10 (prompts ≤200K) | No |

Where KIMI wins: much lower prices, open weights, and strong agentic results. The trade-offs are slower responses and a gap on SWE-Bench Verified.

Pros/Cons & Real User Reviews (Reddit/X)

Pros:

- Human-like writing: "As natural as Claude" (Reddit)

- Coding expert: Full app builds, bug fixes

- Cost-effective: Free web + cheap API

- Agent stability: Up to 300 tool calls without drifting off task

Cons:

- Slow first token: Time to first token (TTFT) is 2-3x longer

- Server overload: Long waits at peak times

- Weaker multimodal support: Text-focused

User voices:

"Refactored code for 4 hours with Kimi K2 Thinking. Others gave up." – X user

"Reddit calls it 'best coding agent.' Human-like and stable." – r/LocalLLaMA

Conclusion: 10x Your Productivity with KIMI

KIMI AI isn't just a chatbot: it's a reasoning agent that previews where AI is heading. Start at kimi.moonshot.cn today, run it locally for privacy, or use the API in your apps. China's AI wave is here, so put it to work in your workflow now.

Questions? Comment below! Next: Building projects with Kimi.