On February 11, 2026, Beijing-based AI startup Z.ai (Zhipu AI) dropped a bombshell on the global AI industry: GLM-5, a massive 744B-parameter MoE model that posts the strongest results yet for an open-source model. Even more astonishing, the model was trained entirely on Huawei Ascend chips, without a single NVIDIA GPU. We dive deep into China's counterattack against GPT-5.2 and Claude Opus 4.5.

1. The Arrival of GLM-5: Unmasking Pony Alpha 🦄

In early 2026, the AI community buzzed about a mystery model. Listed as "Pony Alpha" on OpenRouter, it rivaled Claude Opus 4.5 and GPT-5.2 on coding benchmarks, prompting the question: "What on earth is this?" On Reddit, "ponies running" memes with unicorn emojis went viral, while Discord channels launched investigations to uncover its identity.

💡 Key Point: Pony Alpha = GLM-5

On February 11, 2026, Z.ai officially revealed that Pony Alpha was a stealth test version of GLM-5. This marked one of the first cases of a Chinese AI company using the classic "stealth launch" strategy to gather feedback from developers worldwide.

Z.ai (Zhipu AI), spun off from Tsinghua University in 2019, is China's premier AI startup. It listed on the Hong Kong Stock Exchange in January 2026, raising approximately $550 million (₩735 billion) that went directly into GLM-5 development, and holds the title of China's first publicly listed foundation model company.

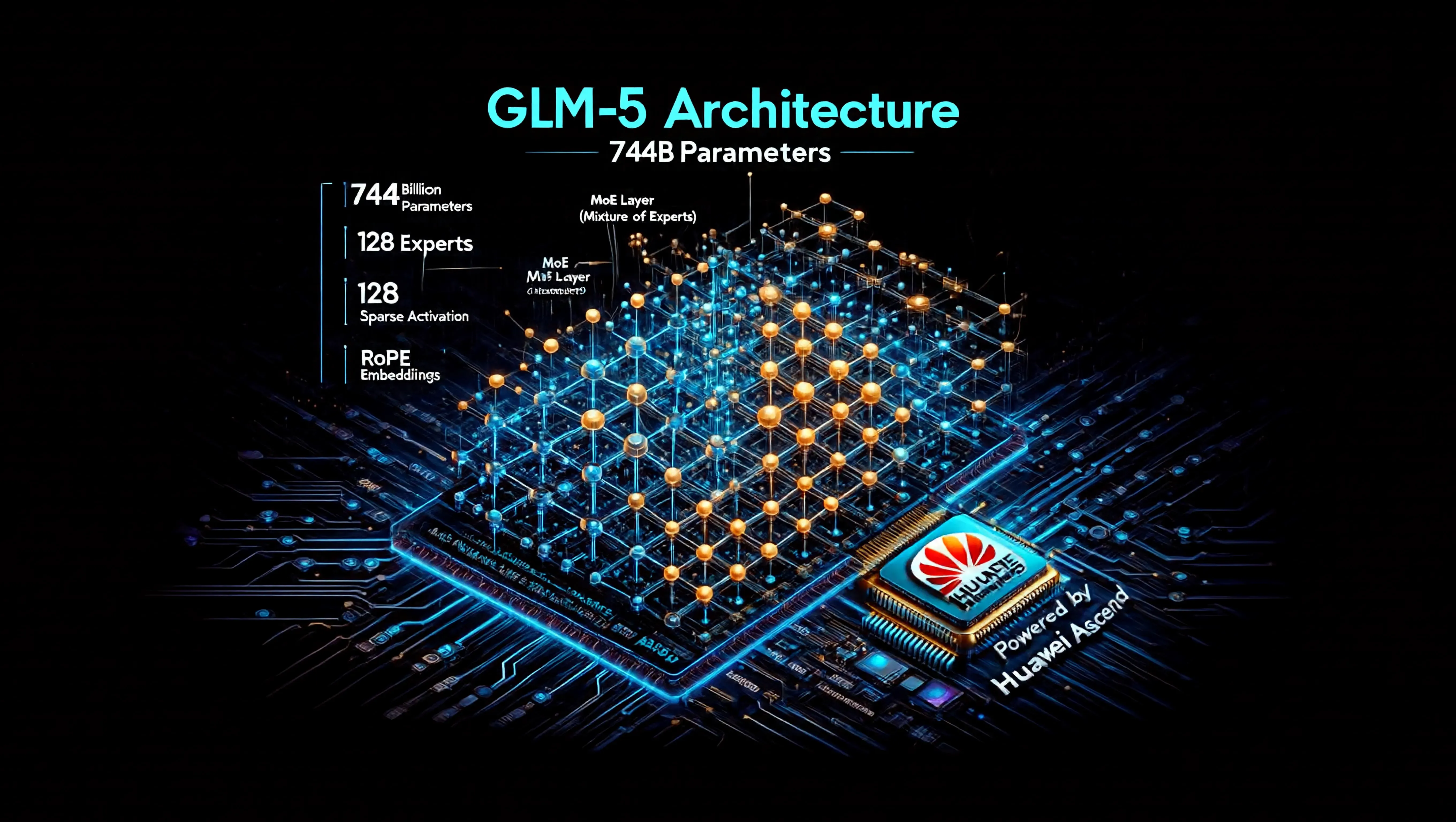

2. 744B Parameters: Deep Technical Analysis 🏗️

The most striking feature of GLM-5 is its enormous scale. Expanding from 355B parameters in its predecessor GLM-4.5 to 744B, it stands as one of the largest open-source models ever released.

2.1 MoE (Mixture of Experts) Architecture

GLM-5 adopts the Mixture of Experts (MoE) architecture to maximize efficiency. Of its 744B total parameters, only about 40B are active for any given token, which sharply cuts the compute per token while retaining the capacity of a much larger model.

| Component | GLM-5 Spec | Description |
|---|---|---|
| Number of Experts | 256 | 8 experts activated per token |
| Context Window | 200K tokens | ~150,000 English words |
| Max Output Length | 128K tokens | Industry-leading long-form generation |
| Attention Mechanism | DSA (DeepSeek Sparse Attention) | Revolutionary long-context efficiency |
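To make "only 40B of 744B parameters active" concrete, here is a minimal, framework-free sketch of top-k expert routing using the 256-expert / 8-active figures from the table. It is a toy under stated assumptions, not GLM-5's implementation: the gate logits are random, the "experts" are one-line functions, and the names route_token and moe_forward are hypothetical. Real MoE layers also add load-balancing objectives and batched expert dispatch.

```python
import math
import random

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(gate_logits, k):
    """Pick the top-k experts for one token and renormalize their gate weights.

    gate_logits: one router score per expert.
    Returns (expert_index, weight) pairs whose weights sum to 1.
    """
    top = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    weights = softmax([gate_logits[i] for i in top])  # softmax over the selected experts only
    return list(zip(top, weights))

def moe_forward(x, experts, gate_logits, k):
    """Weighted sum of the selected experts' outputs; unselected experts are never called."""
    return sum(w * experts[i](x) for i, w in route_token(gate_logits, k))

if __name__ == "__main__":
    NUM_EXPERTS, TOP_K = 256, 8  # figures from the table above
    rng = random.Random(0)
    logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]  # toy router scores
    experts = [lambda x, s=i: x * (1 + s / NUM_EXPERTS) for i in range(NUM_EXPERTS)]  # toy experts
    chosen = route_token(logits, TOP_K)
    print(len(chosen), round(sum(w for _, w in chosen), 6))
    print(moe_forward(1.0, experts, logits, TOP_K))
    print(f"active share of parameters: {40 / 744:.1%}")  # 5.4%
```

Only the 8 chosen experts run for each token, which is why compute per token tracks the roughly 40B active parameters rather than the full 744B.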

2.2 DeepSeek Sparse Attention (DSA) Integration

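GLM-5 integrates DeepSeek Sparse Attention (DSA), the mechanism DeepSeek introduced with its V3.2 models. Traditional dense attention compares every token with every earlier token, so its cost grows as O(n²) with context length n. DSA restricts the main attention computation to a small, fixed number k of selected tokens per query, bringing that part down to O(nk) and dramatically reducing memory and compute when processing long contexts.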

🔬 How DSA Works

Instead of attending to all past tokens, DSA uses a learned scoring function to selectively attend to only the top-K KV positions. This is done through a lightweight "Indexer" filtering layer, maintaining long-context capabilities while slashing memory and computation costs.
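The indexer-then-attend idea can be sketched for a single query in plain Python. This is a simplified illustration, not DeepSeek's or Z.ai's code: DeepSeek's actual indexer (which it calls the "lightning indexer") uses its own learned multi-head scoring, whereas here the indexer is assumed to be a plain dot product between hypothetical low-dimensional vectors, and the function name sparse_attention is our own.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def sparse_attention(query, keys, values, index_query, index_keys, top_k):
    """Single-query attention restricted to the top_k positions chosen by a cheap indexer.

    index_query / index_keys: small vectors used only for ranking positions
        (a stand-in for DSA's learned indexer).
    query / keys / values: full-size vectors used for the actual attention.
    Returns (output_vector, selected_positions).
    """
    # 1. Indexer: score every past position cheaply (touches all n, but with tiny vectors).
    scores = [dot(index_query, k) for k in index_keys]
    selected = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:top_k]

    # 2. Scaled dot-product attention over the selected positions only.
    d = len(query)
    logits = [dot(query, keys[i]) / math.sqrt(d) for i in selected]
    m = max(logits)
    weights = [math.exp(l - m) for l in logits]
    total = sum(weights)
    weights = [w / total for w in weights]

    # 3. Weighted sum of the selected value vectors.
    out = [0.0] * len(values[0])
    for w, i in zip(weights, selected):
        for j, v in enumerate(values[i]):
            out[j] += w * v
    return out, selected

if __name__ == "__main__":
    keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
    values = [[1.0], [2.0], [3.0], [4.0]]
    index_keys = [[0.9], [0.1], [0.8], [-0.3]]  # hypothetical indexer projections
    out, picked = sparse_attention([1.0, 0.5], keys, values, [1.0], index_keys, top_k=2)
    print(picked, out)  # picked == [0, 2]
```

With top_k equal to the sequence length this reduces to ordinary dense attention; the savings come from keeping top_k fixed while the context grows.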

2.3 "Slime" Asynchronous RL Framework

Z.ai developed "Slime", a new asynchronous Reinforcement Learning (RL) infrastructure for training GLM-5. It is designed to tackle the "long tail" bottleneck of traditional RL, where a few unusually long rollouts hold up an entire training step, and brings the following innovations:

- Asynchronous Trajectory Generation: Breaks traditional RL's synchronous lockstep, generating trajectories independently for improved training efficiency

- APRIL (Active Partial Rollouts): Attacks rollout generation, which can account for 90%+ of RL training time, at the system level

- Three-Module Architecture: A Megatron-LM-based training module, an SGLang-based rollout module, and a central data buffer

- Multi-Turn Compilation Feedback: Provides a robust training environment for complex agentic tasks
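The partial-rollout idea behind APRIL, as we understand it, is to over-provision rollout requests, stop generating once enough trajectories have finished, and carry the unfinished ones over to the next step instead of discarding them. The toy single-process simulation below illustrates that scheduling effect only; decoding is modeled as one token per "tick", and Rollout and april_step are hypothetical names, not Slime's API.

```python
from dataclasses import dataclass

@dataclass
class Rollout:
    rid: int
    target_len: int     # tokens needed to finish (known only to this simulation)
    generated: int = 0  # tokens decoded so far

def april_step(in_flight, batch_size):
    """Decode all in-flight rollouts one token per tick until batch_size have finished.

    Returns (finished, partial, ticks). Unfinished rollouts are kept as partial
    rollouts so they can resume next step instead of being thrown away.
    """
    finished, ticks = [], 0
    while len(finished) < batch_size and in_flight:
        ticks += 1
        for r in in_flight:
            r.generated += 1
        finished += [r for r in in_flight if r.generated >= r.target_len]
        in_flight = [r for r in in_flight if r.generated < r.target_len]
    return finished, in_flight, ticks

if __name__ == "__main__":
    # Over-provision: launch 6 rollouts, train on the first 4 to finish.
    lengths = [3, 5, 4, 50, 6, 100]  # two "long tail" trajectories
    pool = [Rollout(i, n) for i, n in enumerate(lengths)]
    done, partial, ticks = april_step(pool, batch_size=4)
    print("ticks:", ticks)                                           # 6 (a synchronous batch would wait 100)
    print("trained on:", sorted(r.rid for r in done))                # [0, 1, 2, 4]
    print("carried over:", [(r.rid, r.generated) for r in partial])  # [(3, 6), (5, 6)]
```

The two long trajectories no longer stall the step; they keep their progress and finish in later steps.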

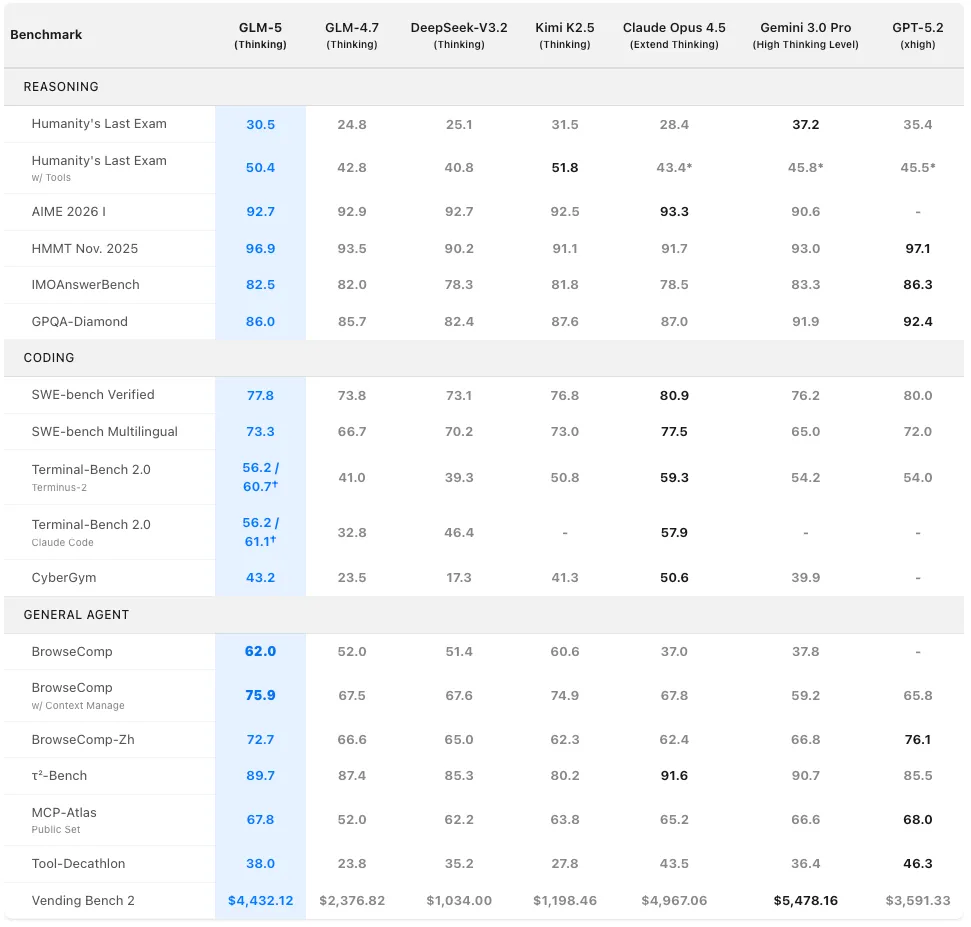

3. Benchmark Wars: The Reign of Open-Source SOTA 🏆

GLM-5's arrival shook up the benchmark leaderboards. On the Artificial Analysis Intelligence Index v4.0, it became the first open-source model to score above 50, ahead of open-source rivals such as Kimi K2.5, DeepSeek V3.2, and MiniMax M2.1.

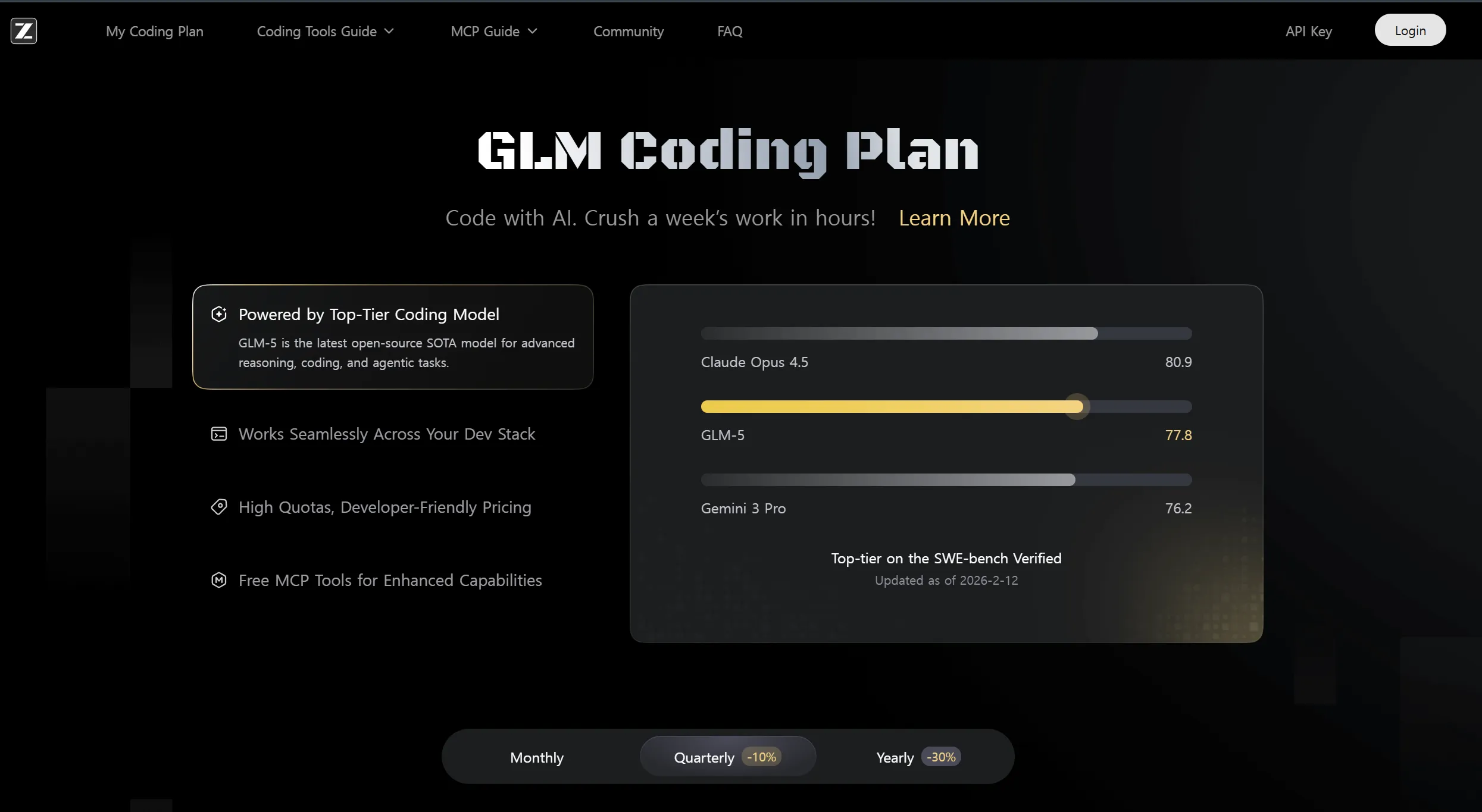

3.1 Coding Benchmark: SWE-bench Verified

On SWE-bench Verified, measuring software engineering capabilities, GLM-5 achieved 77.8%, securing the top spot among open-source models.

| Model | SWE-bench Verified | Terminal-Bench 2.0 | Humanity's Last Exam |
|---|---|---|---|
| GLM-5 🥇 | 77.8% | 56.2% / 60.7%† | 50.4% (w/ tools) |
| Claude Opus 4.5 | 80.9% | 59.3% | 43.4% (w/ tools) |
| GPT-5.2 (high) | 76.2% | 54.2% | 45.8% (w/ tools) |
| Gemini 3 Pro | 80.0% | 54.0% | 45.5% (w/ tools) |
| Kimi K2.5 | 72.5% | 52.1% | 44.2% (w/ tools) |

† Verified version (Terminal-Bench 2.0 Verified)

3.2 Long-Term Agent Benchmark: Vending Bench 2

On Vending Bench 2, simulating one year of vending machine operations, GLM-5 ranked #1 among open-source models with a final balance of $4,432, demonstrating long-term planning and resource management capabilities.

3.3 Hallucination Index: Industry Record Low

GLM-5 scored -1 on the Artificial Analysis Omniscience Index, the lowest hallucination rate among global AI models. The score reflects the model's "intellectual humility": it tends to recognize when it doesn't know an answer instead of guessing.

🧠 Hallucination Rate Comparison (Lower is Better)

- GLM-5: -1 points (New Record 🏆)

- GLM-4.7: 34 points

- Claude Opus 4.5: 12 points

- GPT-5.2: 18 points

- Gemini 3 Pro: 22 points

4. Trained on Huawei Ascend: Symbol of Chinese AI Independence 🇨🇳

The most politically significant aspect of GLM-5 is its training infrastructure. Added to the US Commerce Department's "Entity List" in January 2025, Z.ai lost access to NVIDIA H100/H200 GPUs. Yet they succeeded in training a frontier-grade model using only Huawei Ascend chips and the MindSpore framework.

"GLM-5 has achieved complete independence from US-manufactured semiconductors. This is a milestone proving that China can achieve self-sufficiency in large-scale AI infrastructure."

— Z.ai Official Statement

4.1 Domestic Chip Compatibility

GLM-5 is also compatible with Chinese domestic chips at the inference stage.

Z.ai developed an inference engine that guarantees high throughput and low latency on domestic chip clusters through low-level operator optimization. This is a crucial step toward complete independence for China's AI ecosystem.

4.2 Geopolitical Implications

GLM-5's success calls into question the effectiveness of US semiconductor export controls. Now that China has shown it can develop frontier AI on domestic chips, the balance of power in the global AI industry is shifting. Developing nations in particular may consider moving away from NVIDIA dependence toward a more accessible and affordable Chinese ecosystem.

5. Agentic Engineering: Beyond Vibe Coding 🤖

Z.ai describes GLM-5 as "the transition from Vibe Coding to Agentic Engineering," meaning AI that goes beyond simple code generation to designing complex systems and carrying out long-running tasks.

5.1 System 2 Thinking (Deep Reasoning)

GLM-5 works through complex problems step by step in its "Thinking" mode, an AI take on the "System 2" (slow, deliberate) thinking described in Daniel Kahneman's "Thinking, Fast and Slow."

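```python
from zai import ZaiClient  # Z.ai Python SDK (pip install zai-sdk)

client = ZaiClient(api_key="your-api-key")

response = client.chat.completions.create(
    model="GLM-5",
    messages=[{"role": "user", "content": "Refactor the user auth module to support OAuth2.0"}],
    thinking={"type": "enabled"},  # enable System 2-style deep reasoning
)

message = response.choices[0].message
print(message.reasoning_content)  # reasoning_content: the model's thought process
print(message.content)            # content: the final generated code
```

5.2 Autonomous Debugging & Self-Correction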

GLM-5 goes beyond code generation: it analyzes logs, identifies root causes, and iteratively fixes compile-time and runtime errors, using a self-correction loop aimed at getting the whole system running end to end.

🔄 Agentic Loop

- Understand and decompose goals (Architect-level approach)

- Generate and execute code

- Detect errors and analyze logs

- Identify root causes

- Fix and retry (iterate)

- Verify successful execution
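The loop above maps onto a generate, execute, read-the-error, retry cycle. Below is a minimal sketch under explicit assumptions: propose stands in for a call to GLM-5 (here a hypothetical fake_model that returns a buggy first draft and a fixed second draft), "execution" is Python's exec, and "verification" simply means the code ran without raising. Goal decomposition is left out, and all names are illustrative.

```python
import traceback
from typing import Callable, Optional

def run_snippet(code: str) -> Optional[str]:
    """Run code in a fresh namespace; return the traceback text on failure, None on success.

    exec() is NOT a sandbox: a real agent would use an isolated process or container.
    """
    try:
        exec(code, {})
        return None
    except Exception:
        return traceback.format_exc()

def agentic_loop(goal: str,
                 propose: Callable[[str, Optional[str]], str],
                 max_attempts: int = 3) -> tuple[int, str]:
    """Generate -> execute -> read errors -> fix -> retry, until the code runs cleanly."""
    feedback: Optional[str] = None
    for attempt in range(1, max_attempts + 1):
        code = propose(goal, feedback)  # model writes (or rewrites) code, seeing the last error log
        feedback = run_snippet(code)    # execute and capture the error log, if any
        if feedback is None:            # verification: ran without raising
            return attempt, code
    raise RuntimeError(f"gave up after {max_attempts} attempts:\n{feedback}")

def fake_model(goal: str, feedback: Optional[str]) -> str:
    """Hypothetical stand-in for an LLM call: first draft has a typo, second draft fixes it."""
    if feedback is None:
        return "total = 41\nresult = totl + 1"  # NameError on purpose
    assert "NameError" in feedback              # the fix is driven by the error log
    return "total = 41\nresult = total + 1\nassert result == 42"

if __name__ == "__main__":
    attempts, final_code = agentic_loop("compute 42", fake_model)
    print(attempts)  # 2
```

In practice, run_snippet would run inside a sandbox with a timeout, and verification would run real tests rather than only checking for exceptions.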

5.3 Coding Agent Integration

GLM-5 is compatible with 20+ coding agents including Claude Code, OpenCode, Kilo Code, Roo Code, Cline, and Droid. Subscribe to Z.ai's GLM Coding Plan to use GLM-5 across all these tools.

6. Office Automation: The Document Revolution 📄

One of GLM-5's most practical innovations is its native office document generation capability. Through Z.ai's "Agent Mode," prompts can be directly converted to .docx, .pdf, and .xlsx files.

6.1 Supported Document Formats

- .docx: PRDs, plans, reports

- .pdf: Financial reports, proposals

- .xlsx: Data analysis, spreadsheets

6.2 Real-World Use Cases

Handle complex tasks with a single sentence:

- Financial Reports: "Analyze this quarter's revenue data and create a PDF report with charts"

- Educational Materials: "Create a calculus lesson plan with exam questions for 11th graders in Word format"

- Sponsorship Proposals: "Write a professional investment proposal for an AI startup"

- Operations Manuals: "Create a cafe opening checklist and shift rules in table format"

"Foundation models are evolving from 'conversation' to 'work.' Like office tools for knowledge workers, they are becoming programming tools for engineers."

— Z.ai Blog

6.3 Multi-Turn Collaboration

Z.ai's Agent Mode goes beyond one-shot document generation: it progressively refines documents through multi-turn conversation. Instructions like "add a table here," "change the font," or "explain this section in more detail" yield highly polished final outputs.

7. Price Disruption: 6x Cheaper Frontier Model 💰

One of GLM-5's most attractive aspects is its disruptive pricing. Compared with Claude Opus 4.6, its input tokens are roughly 5-6x cheaper and its output tokens roughly 8-10x cheaper, while it delivers comparable performance.

7.1 Price Comparison Table

| Model | Input ($/1M tokens) | Output ($/1M tokens) | Total Cost (1M in + 1M out) |
|---|---|---|---|
| GLM-5 🏆 | $0.80 ~ $1.00 | $2.56 ~ $3.20 | $3.36 ~ $4.20 |
| DeepSeek V3.2 | $0.28 | $0.42 | $0.70 |
| Kimi K2.5 | $0.60 | $3.00 | $3.60 |
| GPT-5.2 | $1.75 | $14.00 | $15.75 |
| Claude Sonnet 4.5 | $3.00 | $15.00 | $18.00 |
| Claude Opus 4.6 | $5.00 | $25.00 | $30.00 |
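To see what these per-token prices mean for a concrete job, the sketch below prices a workload at list rates. The dictionary copies the table above (taking the upper end of GLM-5's range), workload_cost is a hypothetical helper, and the 10M-input / 2M-output workload is an assumed example; caching discounts, batch pricing, and retries are ignored.

```python
# USD per 1M tokens as (input, output), copied from the price table above.
# GLM-5 is listed as a range; this sketch uses the upper end ($1.00 / $3.20).
PRICES = {
    "GLM-5": (1.00, 3.20),
    "DeepSeek V3.2": (0.28, 0.42),
    "Kimi K2.5": (0.60, 3.00),
    "GPT-5.2": (1.75, 14.00),
    "Claude Sonnet 4.5": (3.00, 15.00),
    "Claude Opus 4.6": (5.00, 25.00),
}

def workload_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Dollar cost of a workload at list price (no caching discounts or retries)."""
    price_in, price_out = PRICES[model]
    return (input_tokens * price_in + output_tokens * price_out) / 1_000_000

if __name__ == "__main__":
    # Hypothetical workload: 10M input tokens, 2M output tokens
    for name in PRICES:
        print(f"{name:<18} ${workload_cost(name, 10_000_000, 2_000_000):8.2f}")
```

For that input-heavy mix, GLM-5 comes to $16.40 versus $100.00 for Claude Opus 4.6, roughly a 6x gap; output-heavy workloads move the gap toward the output-price ratio (about 7.8x at the upper end of GLM-5's range).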

💡 Cost Efficiency Analysis

According to WaveSpeedAI's early testing, GLM-5 can complete in a single pass tasks that GLM-4.7 needed two attempts for, so fewer retries translate into a lower real-world cost per completed task. As WaveSpeedAI put it: "When a job requires two GLM-4.7 passes, one GLM-5 pass delivers cost efficiency."

7.2 GLM Coding Plan Subscription Options

Z.ai offers the GLM Coding Plan for developers. It provides 3x, 5x, and 4x the usage of Claude Pro plans, with a 30% discount for annual subscriptions.

- Lightweight workloads

- GLM-4.7 support

- 20+ coding tools compatible

- Complex workloads

- 40-60% faster speed

- Vision, Web Search MCP

- GLM-5 support

- Peak time performance guarantee

- Early access to new features

8. Real User Reactions: Reddit & Discord Analysis 💬

How did the global developer community react to GLM-5's arrival? We analyzed actual user reactions from r/LocalLLaMA, r/singularity, and OpenRouter's Discord channels.

8.1 Reddit Reactions

"This isn't just another Chinese model. A 744B parameter model trained on Huawei chips matching Claude Opus is a seismic shift in the AI industry."

— r/LocalLLaMA, u/AIResearcher2026

"GLM-5's 'intellectual humility' is impressive. An AI that admits when it doesn't know something? The -1 hallucination score is truly revolutionary."

— r/singularity, u/TechFuturist

"So Pony Alpha was GLM-5 all along... I knew something was different when testing on OpenRouter. The coding performance is insane."

— r/OpenRouter, u/CodeMaster_CN

8.2 Discord Community Reactions

The official OpenRouter Discord channel saw heated discussions about GLM-5's performance:

- Performance Assessment: "80% the price of Claude Opus 4.5 for 95% the performance" was the dominant evaluation

- Coding Ability: "Exceptional ability to handle complex refactoring tasks in one go"

- Document Generation: "Direct PDF output is a game-changer. Workflows will completely change"

- Chinese Processing: "Unlike English-centric models, it perfectly understands Chinese context"

8.3 Criticisms and Concerns

Alongside positive reactions, some concerns were raised:

⚠️ Community Concerns

- Censorship Concerns: Potential bias on certain topics as a Chinese company model

- Sustainability: Impact of profitability pressure post-Hong Kong listing on model quality

- Ecosystem: Maturity of Huawei/domestic chip ecosystem vs NVIDIA ecosystem

- Global Accessibility: Potential API access restrictions in some regions

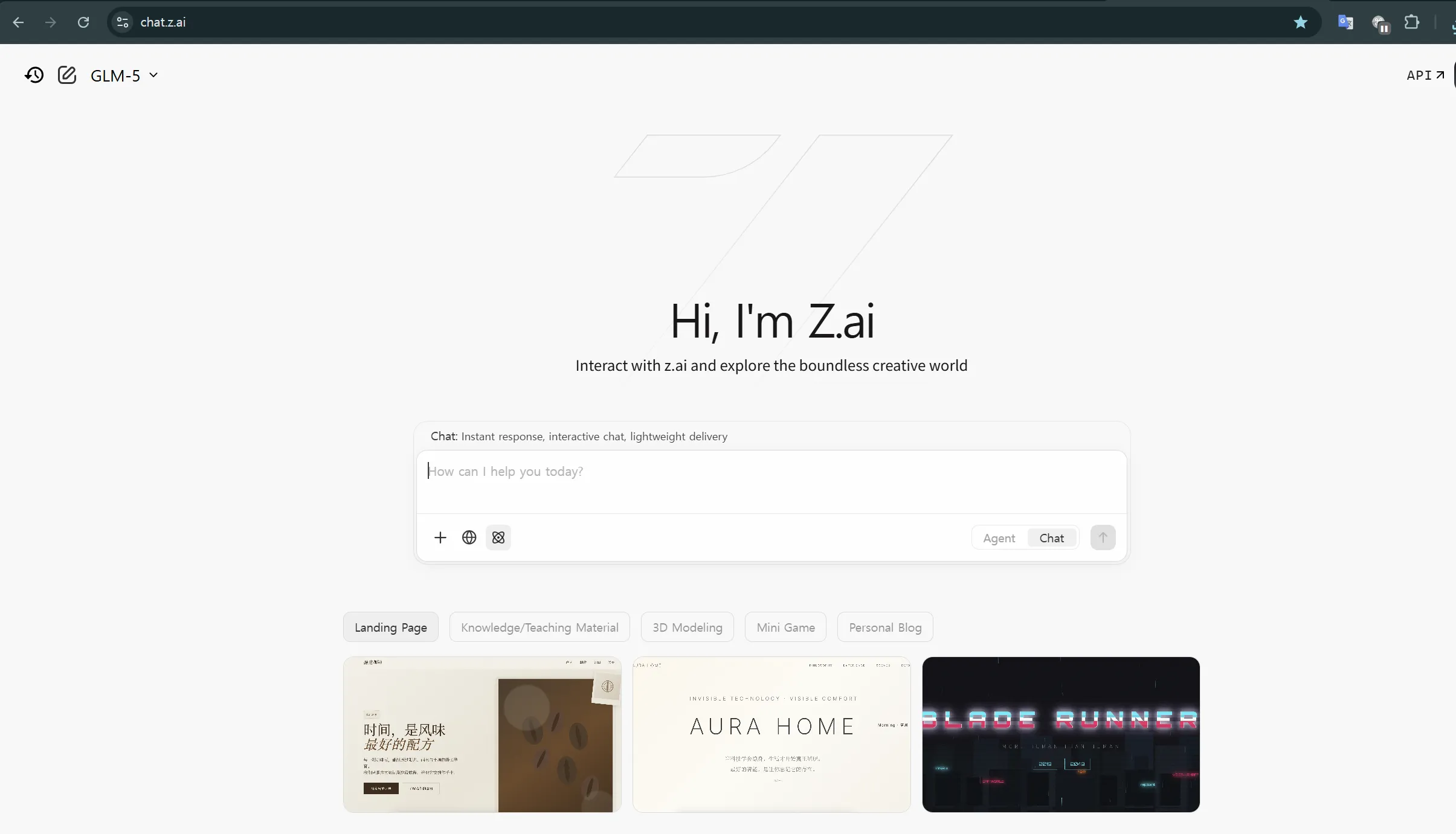

9. Setup & Usage Guide: Building Your Own GLM-5 🛠️

Want to try GLM-5 yourself? You can easily get started through the Z.ai platform and OpenRouter.

9.1 Z.ai Platform Sign-up & API Key

Step 1: Sign up for Z.ai

Visit z.ai and register with your email address. Google and GitHub sign-in are also supported.

Step 2: Get API Key

Go to Dashboard → API Keys menu and generate a new API key. Copy and store it securely immediately.

Step 3: Choose a Plan

Start with free credits ($18 for new sign-ups) or subscribe to GLM Coding Plan.

Step 4: First API Call

Start your first conversation with GLM-5 using the example code below.

9.2 Python SDK Installation & Usage

```shell
# 1. Install the Z.ai Python SDK
pip install zai-sdk
```

```python
# 2. Basic usage example
from zai import ZaiClient

client = ZaiClient(api_key="your-api-key-here")

# Regular chat
response = client.chat.completions.create(
    model="GLM-5",
    messages=[
        {"role": "system", "content": "You are a professional software engineer."},
        {"role": "user", "content": "Refactor the user auth module to support OAuth2.0"},
    ],
)
print(response.choices[0].message.content)

# Deep Reasoning (Thinking) mode
response_thinking = client.chat.completions.create(
    model="GLM-5",
    messages=[{"role": "user", "content": "Solve this complex algorithm problem"}],
    thinking={"type": "enabled"},
)

# reasoning_content: the model's thought process
print(response_thinking.choices[0].message.reasoning_content)
```

9.3 Access via OpenRouter

Using GLM-5 through OpenRouter enables easier comparison testing with various models:

```python
# OpenRouter API example
import requests

response = requests.post(
    "https://openrouter.ai/api/v1/chat/completions",
    headers={
        "Authorization": "Bearer YOUR_OPENROUTER_KEY",
        "Content-Type": "application/json",
    },
    json={
        "model": "z-ai/glm-5",
        "messages": [{"role": "user", "content": "Hello!"}],
    },
    timeout=60,
)
response.raise_for_status()  # surface HTTP errors (bad key, unknown model) before parsing

result = response.json()
print(result["choices"][0]["message"]["content"])
```

9.4 Coding Agent Integration

To use GLM-5 with Claude Code, Cline, Roo Code, etc.:

🔧 Claude Code Setup

- Install Claude Code: npm install -g @anthropic-ai/claude-code

- Subscribe to the GLM Coding Plan and get an API key

- Set the Z.ai API as a custom endpoint in Claude Code's settings

- Enter the model ID: GLM-5

10. Future Outlook: Changing the AI Landscape 🔮

GLM-5's arrival is more than just a model release: it signals a seismic shift in the global AI industry. A new era has begun in which China stands shoulder-to-shoulder with the US in frontier AI.

10.1 New Standard for Open-Source AI

GLM-5 has redefined what open-source models can be. Once seen as "cheaper alternatives to commercial models," they are now "mainstream options that rival commercial models on performance and undercut them on price."

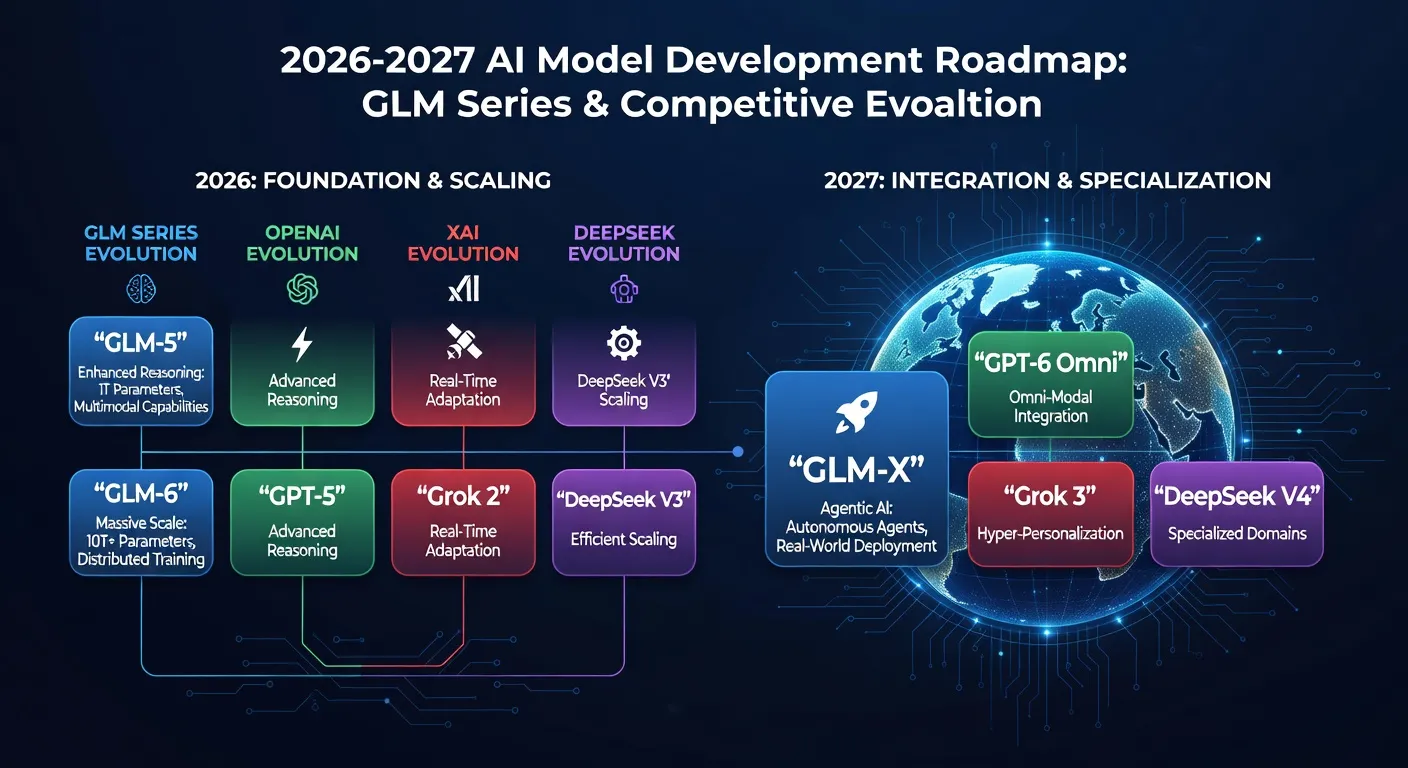

10.2 Technical Evolution Roadmap

Z.ai has presented the following roadmap beyond GLM-5:

- GLM-5.5 (Q2 2026): Enhanced multimodal capabilities, image/video understanding and generation

- GLM-6 (Q4 2026): Breaking 1T parameters, real-time agentic task support

- GLM-7 (2027): Targeting AGI-level reasoning, autonomous scientific research

10.3 Geopolitical Implications

GLM-5's success will accelerate two major trends: "AI Democratization" and "Technological Sovereignty":

🌐 AI Democratization

Developing nations can now move away from NVIDIA dependence toward a more accessible and affordable Chinese ecosystem. This will accelerate the global spread of AI technology.

🛡️ Technological Sovereignty

Europe, India, Brazil and others will accelerate development of their own models based on open-source models like GLM-5 to secure national AI sovereignty.

"GLM-5 is China's declaration of independence in AI. US sanctions have only made China stronger. The world must now prepare for a bifurcated AI ecosystem."

— MIT Technology Review, February 2026

10.4 Lessons for Developers

GLM-5's arrival conveys the following messages to developers:

- Model-Agnostic Development: Design flexible architectures not tied to specific vendors

- Cost Optimization: Actively explore models with superior price-performance ratios

- Multi-Model Strategy: Ability to select optimal models based on task characteristics

- Open Source Contribution: Participate in community-centered AI ecosystems
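One lightweight way to act on the model-agnostic and multi-model points is to treat model choice as data rather than hard-coding a vendor. The sketch below (hypothetical names ModelOption, CATALOG, pick_model) picks the cheapest model that clears a task-specific quality bar, using SWE-bench Verified scores from the Section 3.1 table and 1M-in + 1M-out costs from the Section 7.1 table. A single benchmark is a crude proxy for "task characteristics"; a real router would weigh several signals.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class ModelOption:
    name: str
    swe_bench: float       # SWE-bench Verified, % (Section 3.1 table)
    cost_1m_in_out: float  # USD for 1M input + 1M output tokens (Section 7.1 table)

# Only models that appear in both tables are included.
CATALOG = [
    ModelOption("GLM-5", 77.8, 4.20),
    ModelOption("GPT-5.2", 76.2, 15.75),
    ModelOption("Kimi K2.5", 72.5, 3.60),
]

def pick_model(catalog: list[ModelOption], min_score: float) -> Optional[ModelOption]:
    """Cheapest model whose benchmark score meets the task's bar (None if none qualifies)."""
    eligible = [m for m in catalog if m.swe_bench >= min_score]
    return min(eligible, key=lambda m: m.cost_1m_in_out, default=None)

if __name__ == "__main__":
    for bar in (70.0, 75.0, 77.0, 80.0):
        choice = pick_model(CATALOG, bar)
        print(bar, choice.name if choice else "no model in catalog meets this bar")
```

With these numbers, a 70% bar routes to Kimi K2.5, 75% and 77% route to GLM-5, and 80% matches none of the three, which is the point at which a router would fall back to a pricier model outside this catalog.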

Key Takeaways: 5 Revolutions Brought by GLM-5

- Scale Revolution: At 744B parameters, one of the largest open-source models released to date

- Independence Revolution: Successfully training frontier AI on Huawei chips alone

- Price Revolution: 6x cheaper than Claude Opus with frontier performance

- Accuracy Revolution: A score of -1 on the Artificial Analysis Omniscience Index, an industry-low hallucination rate

- Productivity Revolution: All-in-one AI assistant from document generation to coding