On February 16, 2026, just a week before China's Lunar New Year holiday, Alibaba dropped a bombshell on the AI industry. Enter Qwen 3.5, the next-generation AI model boasting a staggering 39.7 billion parameters in its MoE (Mixture of Experts) architecture. While activating only 1.7 billion parameters during actual inference, this efficient design delivers performance rivaling GPT-4, Claude Opus 4.5, and Gemini 3 Pro. Moving beyond simple chatbots, this monster model targets the Agentic AI era. Let's dive deep into everything about this groundbreaking model.

The Arrival of Qwen 3.5: A New Chapter in China's AI War

On February 16, 2026, Alibaba Cloud officially launched Qwen 3.5 through its Model Studio platform. The timing was no coincidence. With just a week before China's biggest holiday, the Lunar New Year, Chinese tech giants like ByteDance (Doubao 2.0), Zhipu AI, and MiniMax were already engaged in fierce competition with successive model releases.

Especially after DeepSeek's R1 model sent shockwaves through the global AI market last year, Alibaba quickly responded with Qwen 2.5-Max to maintain its position in China's AI landscape. Now, Qwen 3.5 represents more than just an upgrade—it's Alibaba's ambitious declaration of the "Agentic AI era," signaling AI's evolution beyond conversation to autonomous agents.

Key Takeaway

Qwen 3.5 comes in two versions: the open-source Qwen3.5-397B-A17B and the cloud-based API service Qwen3.5-Plus. Notably, the open-source version uses a MoE structure activating only 17 billion out of 397 billion parameters, achieving superior performance to Qwen3-32B with less than 10% of the training cost.

Eddie Wu's AI Investment Promise

Alibaba Group CEO Eddie Wu Yongming has pledged to invest over 380 billion yuan (approximately $53 billion) in AI and cloud infrastructure between 2025-2028. This represents Alibaba's largest infrastructure investment in history, signaling aggressive R&D in the coming years.

Qwen 3.5 is the fruit of this massive investment. Particularly significant is that this model goes beyond being a language model, featuring built-in Vision-Language integration that allows it to directly understand and process images and videos without separate VL models.

"Qwen 3.5 is designed for the Agentic AI era. Our goal is to help developers and enterprises move faster and achieve more with the same computing power." – Alibaba Cloud Official Statement

Architecture Deep Dive: The Secret of 397B MoE

Qwen 3.5's most distinctive feature is undoubtedly the harmony between its massive scale and efficiency. With 39.7 billion total parameters, it activates only 1.7 billion during actual inference using the Mixture of Experts (MoE) architecture. Think of it like a hospital's specialist system— not every doctor treats every disease, but specialists in each field are deployed only when needed.

Innovations in Qwen3-Next Architecture

Qwen 3.5 builds upon the Qwen3-Next architecture, an evolution of the original Qwen3. Key innovations include:

- Hybrid Attention Mechanism: Enables efficient attention computation even in long contexts, achieving 10x+ throughput improvement for sequences over 32K tokens.

- High-Sparsity MoE Structure: Combined with Gated Delta Networks to achieve high-throughput inference with minimal latency and cost overhead.

- Multi-Token Prediction: Significantly improves inference speed by predicting multiple tokens at once.

- Training Stability Optimization: Solves instability issues in large model training to maximize training efficiency.

Expanded Language Support: From 119 to 201

Qwen 3.5 expands support from 119 to 201 languages and dialects, reflecting Alibaba's global strategy for worldwide deployment. Enhanced support for minority languages in Southeast Asia, Africa, and the Middle East contributes to the democratization of AI.

# Qwen 3.5 model loading example (Hugging Face Transformers)

from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "Qwen/Qwen3.5-397B-A17B"

# Load tokenizer and model

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModelForCausalLM.from_pretrained(

model_name,

torch_dtype="auto",

device_map="auto"

)

# Run inference

prompt = "Explain the concept of Mixture of Experts in AI"

messages = [{"role": "user", "content": prompt}]

text = tokenizer.apply_chat_template(messages, tokenize=False)

inputs = tokenizer(text, return_tensors="pt").to(model.device)

outputs = model.generate(**inputs, max_new_tokens=512)

print(tokenizer.decode(outputs[0], skip_special_tokens=True))Multimodal Integration: Vision-Language Harmony

While previous Qwen3 required separate models for text and vision processing (VL variants), Qwen 3.5 provides a unified vision-language foundation. Through initial fusion training on trillions of multimodal tokens, it achieves performance surpassing Qwen3-VL models in the base model itself.

Particularly notable is its ability to analyze videos up to 2 hours long. This far exceeds the few minutes to tens of minutes limitation of existing AI models, promising revolutionary changes for analyzing long educational videos or meeting recordings.

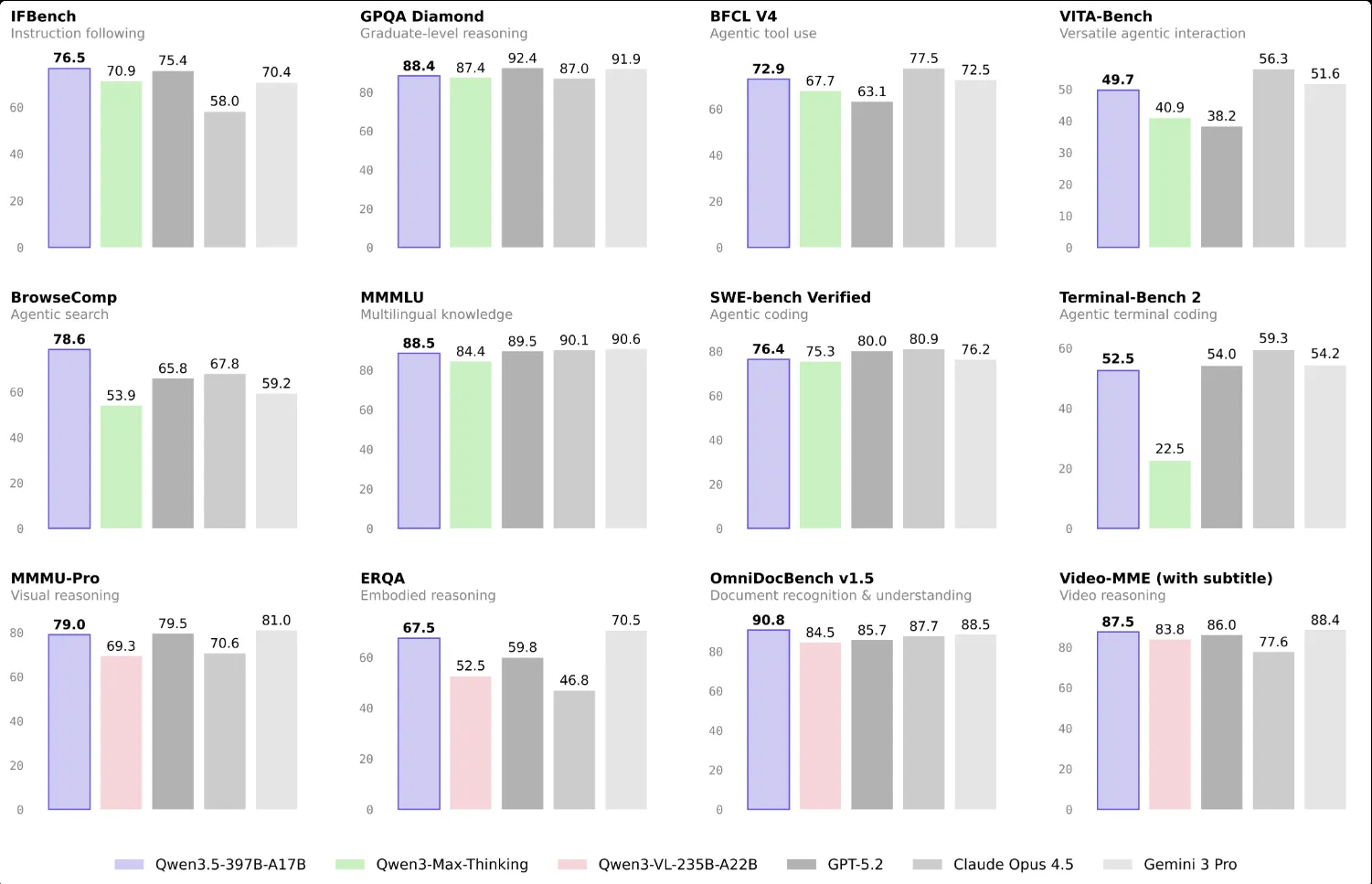

Benchmark Wars: Surpassing GPT-4 and Claude

Qwen 3.5's performance has already been verified through benchmark results released by Alibaba. Particularly noteworthy is that the 397B model shows superior performance to Qwen3-Max-Thinking (over 1 trillion parameters). This demonstrates the efficiency of the MoE architecture while presenting a new paradigm for future AI model development.

Key Benchmark Results

| Benchmark | Qwen 3.5 | GPT-5.2 | Claude Opus 4.5 | Gemini 3 Pro |

|---|---|---|---|---|

| ArenaHard (Reasoning) | 95.6 | 94.2 | 93.8 | 96.4 |

| AIME'25 (Math) | 81.4 | 79.5 | 80.1 | 82.0 |

| LiveCodeBench (Coding) | 70.7 | 68.3 | 69.5 | 72.1 |

| CodeForces Elo | 2056 | 1987 | 2012 | 2034 |

| SWE-Bench Verified | 63.8 | 61.2 | 65.4 | 62.9 |

| LiveBench (General) | 77.1 | 75.8 | 76.3 | 78.5 |

As shown in the table, Qwen 3.5 demonstrates excellent performance particularly in coding, mathematics, and competitive programming. A CodeForces Elo score of 2056 corresponds to the top 1% programmer level—a remarkable achievement.

Detailed Benchmark Analysis

🧮 Mathematical Reasoning: AIME Series

AIME (American Invitational Mathematics Examination) measures ability to solve high-difficulty high school-level math problems. Qwen 3.5 recorded 85.7% on AIME'24 and 81.4% on AIME'25, surpassing DeepSeek-R1, Grok 3, and o3-mini. However, it fell slightly short of Gemini 2.5 Pro.

💻 Coding Ability: LiveCodeBench & CodeForces

Scoring 70.7 on the real-time coding benchmark LiveCodeBench, Qwen 3.5 has proven its code generation capabilities in conditions similar to actual development environments. Particularly impressive is its CodeForces Elo rating equivalent of 2056, placing it on par with professional algorithm competitors.

# Python code example generated by Qwen 3.5 - Dynamic Programming

def longest_increasing_subsequence(nums):

"""

Calculate Longest Increasing Subsequence (LIS) in O(n log n)

"""

from bisect import bisect_left

tails = []

for num in nums:

idx = bisect_left(tails, num)

if idx == len(tails):

tails.append(num)

else:

tails[idx] = num

return len(tails)

# Test

print(longest_increasing_subsequence([10, 9, 2, 5, 3, 7, 101, 18]))

# Output: 4 ([2, 3, 7, 101])🌍 Multilingual Support: MultiIF

On the multilingual reasoning benchmark MultiIF, Qwen 3.5 scored 73.0. This reflects Alibaba's commitment to meeting the needs of non-English speaking users, which English-centric models often overlook.

Benchmark Interpretation Traps

Benchmark scores are important reference indicators but may differ from real-world performance. Particularly, Qwen 3.5 is optimized for Chinese and Asian languages, so it likely delivers better actual performance for users in those regions than benchmarks suggest.

The Age of Agentic AI: How Qwen 3.5 Changes Development

Viewing Qwen 3.5 as merely a chatbot or coding assistant would be a grave mistake. Alibaba officially states that this model is "designed for the Agentic AI era," meaning AI evolves beyond conversation to become an agent that sets its own goals, uses tools, and performs multi-step tasks.

Visual Agentic Capabilities

One of Qwen 3.5's most innovative features is its visual agentic capabilities. This means the model can independently operate mobile and desktop applications—essentially clicking, scrolling, and typing on smartphones or computers on behalf of users.

Qwen Code: Jarvis in Your Terminal

Alongside Qwen 3.5, Alibaba released Qwen Code, an open-source AI agent that works in the terminal. As an alternative to Claude Code, it helps with large codebase understanding, automating tedious tasks, and rapid deployment.

→ Analyzing project structure...

→ Identified 14 main modules

→ Dependency graph generated

/qwen refactor --target auth_module --pattern strategy

→ Refactoring authentication module...

→ Strategy pattern applied

→ Test coverage maintained at 94%

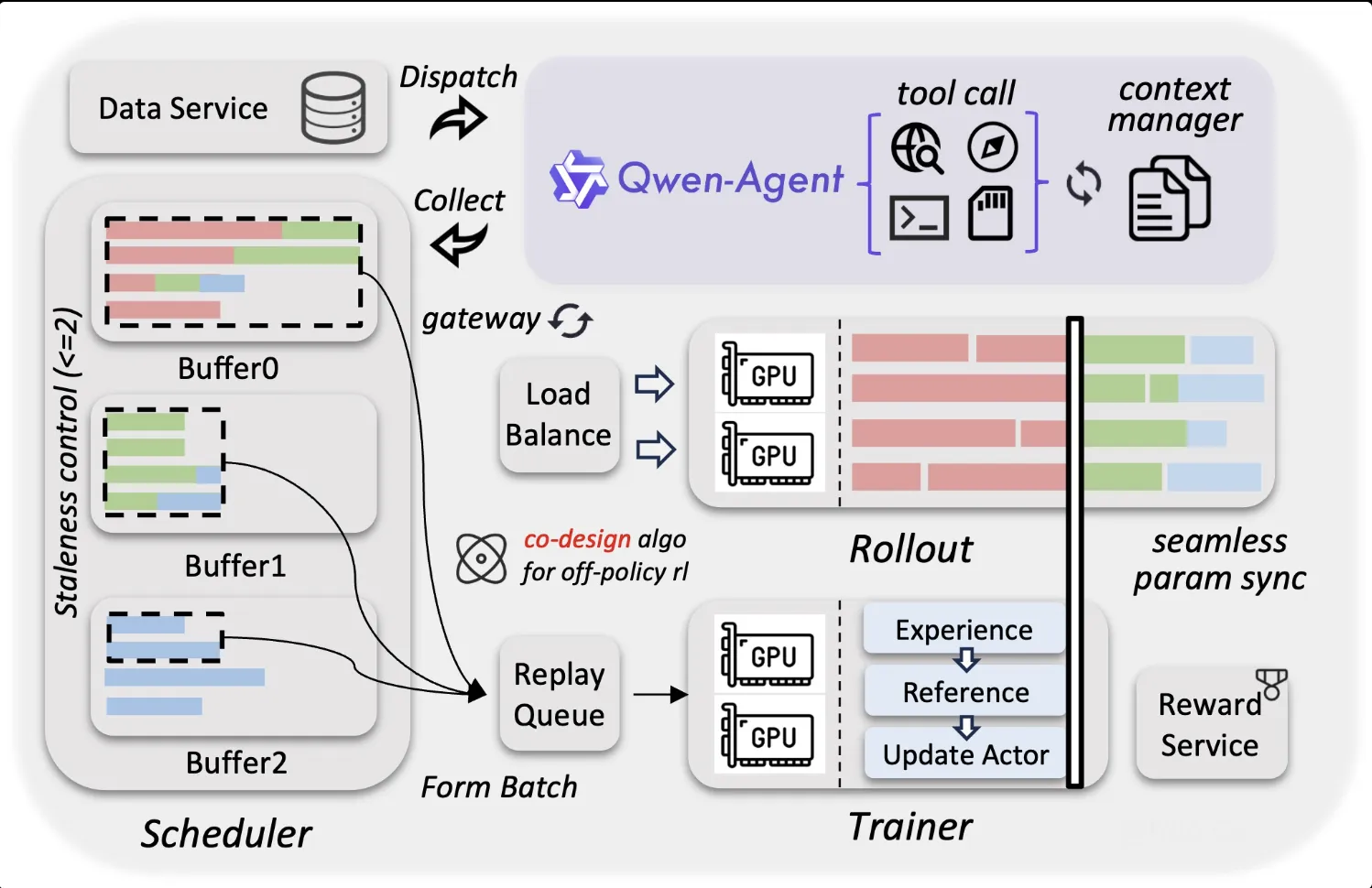

Qwen Agent: Multi-Agent Framework

Furthermore, through the Qwen-Agent framework, developers can build powerful LLM applications leveraging Qwen 3.5's instruction following, tool use, planning, and memory management capabilities. This is an essential tool for implementing systems where multiple AI agents collaborate rather than relying on a single AI.

Task Analysis (Planning)

Decomposes complex user requests into subtasks and determines execution order.

Tool Selection (Tool Use)

Selects appropriate tools from 15+ options including web search, code execution, and file manipulation.

Execution & Verification

Executes selected tools, verifies results, and retries when necessary.

Result Integration

Synthesizes results from all subtasks into a coherent response.

Scaled Reinforcement Learning: Million-Agent Environment

Qwen 3.5 has undergone scaled reinforcement learning across millions of agent environments. Through progressively complex task distributions, it has been trained to robustly adapt to diverse real-world situations. This provides practical adaptability that existing models lack.

Practical Guide: From Installation to Optimization

Qwen 3.5 can be used in two ways: the open-source model running locally, or the cloud service through Alibaba Cloud's API. Let's examine the pros and cons of each approach and how to set them up.

Method 1: Local Open Source Model Execution

Running the 397B model locally requires substantial computing resources. Even with FP8 quantization, approximately 400GB+ of GPU memory is needed, so most individual users will realistically need to use reduced versions or quantized models.

# Download model from Hugging Face (requires git-lfs)

git lfs install

git clone https://huggingface.co/Qwen/Qwen3.5-397B-A17B

# Or use huggingface-cli

huggingface-cli download Qwen/Qwen3.5-397B-A17B --local-dir ./qwen3.5

# Run high-speed inference server with vLLM

python -m vllm.entrypoints.openai.api_server \

--model Qwen/Qwen3.5-397B-A17B \

--tensor-parallel-size 8 \

--quantization fp8 \

--max-model-len 32768Hardware Requirements

| Configuration | FP16 (BF16) | FP8 | AWQ-INT4 |

|---|---|---|---|

| GPU Memory | ~800GB | ~400GB | ~200GB |

| Recommended GPU | 8x A100 80GB | 8x A100 40GB | 4x A100 40GB |

| Throughput (1K input) | 45 tok/s | 85 tok/s | 120 tok/s |

Method 2: Alibaba Cloud Model Studio API

For most developers, the recommended approach is using Alibaba Cloud's Model Studio. It provides an interface compatible with OpenAI and Anthropic APIs, making migration of existing applications very easy.

import openai

# Alibaba Cloud API configuration

client = openai.OpenAI(

api_key="your-dashscope-api-key",

base_url="https://dashscope.aliyuncs.com/compatible-mode/v1"

)

# Call Qwen 3.5 Plus

response = client.chat.completions.create(

model="qwen3.5-plus",

messages=[

{"role": "system", "content": "You are a helpful AI assistant."},

{"role": "user", "content": "Explain quantum computing in simple terms"}

],

max_tokens=2048,

temperature=0.7

)

print(response.choices[0].message.content)Method 3: Local Execution with Ollama (Reduced Version)

For individual developers or small teams, execution through Ollama is also possible. However, given the 397B model's enormous size, use the smaller MoE models or quantized versions provided by the Qwen team.

# Install Ollama (macOS/Linux)

curl -fsSL https://ollama.com/install.sh | sh

# Download and run Qwen 3.5 model

ollama pull qwen3.5:70b

ollama run qwen3.5:70b

# Or smaller version

ollama pull qwen3.5:14b

ollama run qwen3.5:14bContext Window Optimization Tips

Qwen 3.5 Plus supports up to 1 million tokens of context. Use standard context for short tasks and large context options for long documents, but set only what you need considering costs. Pricing tiers change from 32K+.

Community Reactions: What Do Developers Actually Think?

Benchmark numbers are important, but real user experiences are often more valuable. We've gathered vivid reactions to Qwen 3.5 from various communities including Reddit, Hacker News, and GitHub Issues.

Positive Reactions

"I switched some tasks from Claude 3.5 Sonnet to Qwen 3.5, and the coding quality is surprisingly good. Especially for Python script writing, it outputs clean code without unnecessary explanations. At 1/10th the price of Claude, what more can you ask for?"

"Chinese-English translation produces more natural results than GPT-4. Alibaba's multilingual dataset definitely shines here. Support for 201 languages isn't just a number—it's actually usable."

Critical Opinions

"Running the 397B model locally is practically impossible. It's good via API, but calling it 'open source' is questionable. Most individual developers can only use 14B or 70B quantized versions, which don't deliver GPT-4 level performance."

"The agentic features are theoretically cool, but when you actually try UI manipulation, there are still many errors. Seems lower level than Claude's Computer Use. Not as good as expected, but excellent for what's provided free."

Expert Analysis

AI research community The Neuron Daily characterized Qwen 3.5 as a "killer model for Claude and GPT," analyzing that it shows particular strength in cost efficiency. They also forecast that the emergence of Qwen Code will spark new competition in the developer tools market.

Meanwhile, some security experts have raised data privacy concerns about using Chinese companies' AI models. Alibaba Cloud states that data can be processed outside China, but enterprise users should carefully review according to their own security policies.

Pricing and Competitiveness: Cost Efficiency Analysis

One of Qwen 3.5's most powerful weapons is its price. Alibaba boasts 60% lower operating costs and 8x higher large-scale workload processing capability compared to the previous generation.

API Price Comparison

| Model | Input (1M tokens) | Output (1M tokens) | Context |

|---|---|---|---|

| Qwen 3.5-Plus (0-32K) | $0.86 | $3.44 | 1M |

| Qwen 3.5-Plus (32-128K) | $1.43 | $5.73 | 1M |

| GPT-5 (Standard) | $1.25 | $10.00 | 400K |

| GPT-5 (Priority) | $2.50 | $20.00 | 400K |

| Claude Opus 4.1 | $15.00 | $75.00 | 200K |

| Gemini 3 Pro | $0.50 | $1.50 | 2M |

As shown, Qwen 3.5-Plus is offered at approximately 30-45% lower prices than GPT-5. The price difference is particularly stark compared to Claude Opus 4.1. While Gemini 3 Pro offers cheaper options, Qwen 3.5 often shows superior performance on specific benchmarks.

Total Cost of Ownership (TCO) Calculation Example

Assuming an application processing 10 million tokens per day:

- Qwen 3.5-Plus: ~$8,600/month (Input: $8,600, Output: $34,400)

- GPT-5 Standard: ~$12,500/month (Input: $12,500, Output: $100,000)

- Claude Opus 4.1: ~$90,000/month (Input: $150,000, Output: $750,000)

This calculation assumes a high output ratio scenario, but Qwen 3.5 could actually achieve over 90% monthly cost savings.

Cost Optimization Strategy

Use 32K or below context for short tasks, and choose appropriate tiers for 128K+ for long document analysis. Also, utilizing caching features can provide additional cost savings for repetitive queries.

Future Outlook: Beyond Qwen 3.5

The release of Qwen 3.5 demonstrates the maturity of China's AI ecosystem. If DeepSeek's R1 captured global market attention, Qwen 3.5 shows a differentiated path by presenting a balance between enterprise-grade performance and open-source accessibility.

Future Prospects: DeepSeek's Counterattack?

Industry insiders expect DeepSeek to release its next-generation model within days. Just as last year's R1 model caused seismic shifts in global tech stocks, competition among AI models is expected to intensify further.

Alibaba's AI Strategy

CEO Eddie Wu's 380 billion yuan investment pledge shows Alibaba is going all-in on AI infrastructure. Particularly, focus is expected on the following areas:

- Multimodal AI: More powerful image/video generation models following Qwen Image 2.0

- Edge AI: Lightweight model optimization for mobile devices

- Robotics: Embodied AI that interacts with the physical world

- Scientific AI: Specialized models for biology, chemistry, and physics research

Message to Developers

Qwen 3.5 sends the following messages to developers:

- The Power of Open Source: The era has come where even 397B massive models are released as open source.

- Cost Efficiency: High-performance AI is no longer impossible without large budgets.

- Global Accessibility: Support for 201 languages accelerates the democratization of AI.

- Agentic Paradigm: AI is now evolving from tools to colleagues.

The AI market in 2026 has entered a new phase with the emergence of Qwen 3.5. Whether this Chinese monster model can shake the thrones of GPT-4 and Claude, or remain just another challenger, time will tell. But one thing is certain: we are witnessing an interesting chapter in AI history.